Aug 01, 2024

Introduction

After developing my Go project, a vanity URL mapper for my Go packages, I needed a way to deploy it from my local machine to my server. That meant running it as a systemd service, and the service would run it

(a) either locally on my server filesystem, or

(b) in a docker container.

Types of systemd service

A systemd service is normally defined in a unit file. The syntax and description of the unit file can be found by running man systemd.unit on any Linux system that supports systemd. There are conventionally two types of systemd services:

(a) System service: This service is enabled for all users that log into the system. To do this, you would normally run this set of commands:

$ sudo cp <SERVICE_NAME>.service /etc/systemd/system/

$ sudo systemctl daemon-reload

$ sudo systemctl enable <SERVICE_NAME>.service

(b) User service: The service is enabled for only the logged-in user. To do this, you would normally run this set of commands:

$ touch /home/<$USER>/.config/systemd/user/<SERVICE_NAME>.service

$ systemctl --user daemon-reload

$ systemctl --user enable --now <SERVICE_NAME>

Enabling the service will then symlink it into the appropriate .wants subdirectory, and it will run only when that user is logged in. This means that if that user is not logged in, systemd might terminate the service.
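Note that the touch command above fails if the user unit directory does not exist yet. A minimal sketch, assuming the default per-user unit location described in man systemd.unit ($XDG_CONFIG_HOME/systemd/user, falling back to ~/.config/systemd/user); the helper name user_unit_dir is made up for illustration:

```shell
# Hypothetical helper: print the directory where per-user unit files live.
# Assumes the default search path; distributions may add further locations.
user_unit_dir() {
    printf '%s\n' "${XDG_CONFIG_HOME:-$HOME/.config}/systemd/user"
}

# Usage (not executed here): create the directory before placing a unit in it.
#   mkdir -p "$(user_unit_dir)"
#   cp <SERVICE_NAME>.service "$(user_unit_dir)/"
```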

The systemd user instance is started after the first login of a user and killed after the last session of the user is closed. Sometimes it may be useful to start it right after boot, and keep the systemd user instance running after the last session closes, for instance to have some user process running without any open session.

Being killed with the last session is bad for long-running transactions/services. To keep the user instance running, you would run this:

$ loginctl enable-linger $USER

This decouples that user's systemd instance from the user's login sessions, so it is started at boot and keeps running after the last session closes.
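Whether lingering is already enabled can be checked before changing anything. This is a small sketch, assuming that loginctl show-user <user> --property=Linger prints either Linger=yes or Linger=no; the helper name linger_is_enabled is hypothetical:

```shell
# Hypothetical helper: succeed when the text passed in is the line that
# `loginctl show-user <user> --property=Linger` prints for a lingering user.
linger_is_enabled() {
    [ "$1" = "Linger=yes" ]
}

# Usage on a machine with systemd-logind (not executed here):
#   state=$(loginctl show-user "$USER" --property=Linger)
#   linger_is_enabled "$state" || loginctl enable-linger "$USER"
```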

Attempt One

I initially did not know the difference between the types of systemd services. So I ran these steps:

(a) Created a sample service

[Unit]

Description=A custom url mapper for go packages!

After=network-online.target

[Service]

ExecStart=/opt/gocustomurls -conf /home/vagrant/.config/config.json

SyslogIdentifier=gocustomurls

StandardError=journal

Type=exec

[Install]

WantedBy=multi-user.target

(b) Ran these steps

$ sudo cp gocustomurls.service /etc/systemd/system/

$ sudo systemctl daemon-reload

$ sudo systemctl start gocustomurls.service

$ sudo systemctl status gocustomurls.service

So I got this error

× gocustomurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/etc/systemd/system/gocustomurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/service.d

└─10-timeout-abort.conf

Active: failed (Result: exit-code) since Fri 2024-07-19 03:31:19 UTC; 54s ago

Duration: 23ms

Process: 3027 ExecStart=/opt/gocustomurls -conf /home/vagrant/.config/config.json (code=exited, status=1/FAILURE)

Main PID: 3027 (code=exited, status=1/FAILURE)

CPU: 6ms

Jul 19 03:31:19 fedoramachine systemd[1]: Starting gocustomurls.service - GocustomUrls. A custom url mapper for go packages!...

Jul 19 03:31:19 fedoramachine systemd[1]: Started gocustomurls.service - GocustomUrls. A custom url mapper for go packages!.

Jul 19 03:31:19 fedoramachine gocustomurls[3027]: 2024/07/19 03:31:19 File: => /home/vagrant/.config/config.json

Jul 19 03:31:19 fedoramachine gocustomurls[3027]: 2024/07/19 03:31:19 Ok: => false

Jul 19 03:31:19 fedoramachine gocustomurls[3027]: 2024/07/19 03:31:19 Warning, generating default config file

Jul 19 03:31:19 fedoramachine gocustomurls[3027]: 2024/07/19 03:31:19 neither $XDG_CONFIG_HOME nor $HOME are defined

Jul 19 03:31:19 fedoramachine systemd[1]: gocustomurls.service: Main process exited, code=exited, status=1/FAILURE

Jul 19 03:31:19 fedoramachine systemd[1]: gocustomurls.service: Failed with result 'exit-code'.

Basically the error is that the $HOME environment variable is empty. This is consistent with some of the properties of system services, which are:

- Their spawned processes do not inherit a login environment (e.g., in a shell script run by the service, the $HOME environment variable will be empty unless it is set explicitly)

- They run as root by default. As a result, they have root permissions.
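The missing $HOME can be approximated outside systemd by starting a child process with an emptied environment. This is only a rough sketch: systemd passes a small fixed environment rather than a completely empty one, and the helper name home_in_clean_env is made up for illustration.

```shell
# Hypothetical helper: report whether HOME is visible to a child process
# that is started with an empty environment (env -i).
home_in_clean_env() {
    if env -i printenv HOME >/dev/null 2>&1; then
        echo "HOME is set"
    else
        echo "HOME is not set"
    fi
}

home_in_clean_env
```

A program that derives its config path from $HOME or $XDG_CONFIG_HOME, as gocustomurls does in the log above, will fail in the same way under these conditions.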

Attempt Two

So I tried to fix some of the issues with my first attempt by creating a user service. I went through these steps:

(a) Created a non-root user.

Debian

# --system: non-expiring account
# --shell: the login shell
# --comment: sets the finger info up front, making the command non-interactive
# --group: creates an identically named group as its primary group
$ sudo adduser \
--system \
--shell /bin/bash \
--comment 'Go Custom Urls Service' \
--group \
--disabled-password --home /home/$USER $USER

Fedora

$ sudo useradd --system --shell /bin/bash --comment 'Go Custom Urls Service' --home-dir /home/gols -m gols

(b) Unlocked the account by deleting the password

$ sudo passwd -d gols # to unlock the account

(c) Fixed the permissions. I used this resource and this resource to generate the required permissions.

$ sudo chown -R gols:gols /home/gols

$ sudo chmod -R 770 /home/gols

(d) Modified the service unit above so that the service is invoked as that user, through the User= and Group= directives.

[Unit]

Description=GocustomUrls. A custom url mapper for go packages!

After=network-online.target

[Service]

User=gols

Group=gols

ExecStart=/home/gols/gocustomurls -conf /home/gols/.config/gocustomurls/config.json

SyslogIdentifier=gocustomurls

StandardError=journal

Type=simple

WorkingDirectory=/home/gols

Environment=USER=gols HOME=/home/gols

[Install]

WantedBy=multi-user.target

(e) Copied the unit file to the config folder.

$ sudo mkdir -p /home/gols/.config/systemd/user

$ sudo chmod -R 770 /home/gols

$ sudo cp gocustomurls.service /home/gols/.config/systemd/user/

$ sudo chown -R gols:gols /home/gols

(f) Reloaded systemd services

$ su gols

$ systemctl --user daemon-reload

Failed to connect to bus: Permission denied

After googling this error, I learned that I should check whether XDG_RUNTIME_DIR and DBUS_SESSION_BUS_ADDRESS were set correctly. Checking them:

$ id

uid=993(gols) gid=992(gols) groups=992(gols) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

$ echo $XDG_RUNTIME_DIR

/run/user/1000

$ echo $DBUS_SESSION_BUS_ADDRESS

unix:path=/run/user/1000/bus

As you can see above, the variables XDG_RUNTIME_DIR and DBUS_SESSION_BUS_ADDRESS point at the wrong user id (1000 instead of 993). So I tried to export them in the user's ~/.bash_profile

$ cat ~/.bash_profile

...

export XDG_RUNTIME_DIR="/run/user/$UID"

export DBUS_SESSION_BUS_ADDRESS="unix:path=${XDG_RUNTIME_DIR}/bus"

This did not help. So after googling, I came across this resource and tried that

$ systemctl -M gols@ --user daemon-reload

Failed to connect to bus: Permission denied

After further googling, I came across this resource. According to it, there are basically two requirements for the systemctl --user command to work:

- The user instance of the systemd daemon must be alive, and

- systemctl must be able to find it through certain path variables.

The loginctl enable-linger command satisfies the first condition by starting a user instance of systemd even if the user is not logged in. For the second condition, su or su --login does not set the required variables. To get them, you would normally ssh in as that user. This is obviously a non-starter for me. But there is a newer command called machinectl that obviates that need. So armed with this information, I was ready for another attempt.
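The second condition can be checked mechanically. Below is a minimal sketch, assuming the usual /run/user/<uid> layout seen in the output above; the helper name runtime_dir_matches_uid is hypothetical.

```shell
# Hypothetical helper: succeed only when the runtime directory belongs to the
# given numeric user id, i.e. it is exactly /run/user/<uid>.
runtime_dir_matches_uid() {
    uid=$1
    runtime_dir=$2
    [ "$runtime_dir" = "/run/user/$uid" ]
}

# Values taken from the failing session above: uid 993, while the variable
# still pointed at the runtime directory of uid 1000.
if runtime_dir_matches_uid 993 /run/user/1000; then
    echo "runtime dir matches"
else
    echo "runtime dir belongs to another user"
fi
```

In a live session the call would be runtime_dir_matches_uid "$(id -u)" "$XDG_RUNTIME_DIR", followed by a check that "$XDG_RUNTIME_DIR/bus" exists as a socket.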

Attempt Three

So machinectl is available from systemd-container (Debian/Fedora). So I ran these steps based on this resource and this resource:

$ sudo dnf install systemd-container

$ sudo loginctl enable-linger gols

$ sudo machinectl shell --uid=gols

Connected to the local host. Press ^] three times within 1s to exit session.

$ exit

$ sudo systemctl -M gols@ --user daemon-reload

$ su gols

$ echo $XDG_RUNTIME_DIR

/run/user/993

$ echo $DBUS_SESSION_BUS_ADDRESS

unix:path=/run/user/993/bus

For the command sudo systemctl -M gols@ --user daemon-reload to succeed, the variables from the previous attempt must be exported in ~/.bash_profile. I then ran a journalctl command to check the status of the failed service

$ journalctl --user -u gocustomurls.service

Hint: You are currently not seeing messages from the system.

Users in groups 'adm', 'systemd-journal', 'wheel' can see all messages.

Pass -q to turn off this notice.

No journal files were opened due to insufficient permissions.

So I added the gols user to the systemd-journal group

$ sudo usermod -a -G systemd-journal gols

$ sudo systemctl -M gols@ --user list-units | grep "gocustom" # list all the units and find gocustom

So far so good. Restarting the service produces an error

$ sudo systemctl -M gols@ --user restart gocustomurls.service

$ su gols

$ journalctl --user -u gocustomurls.service

Jul 19 07:19:03 fedoramachine systemd[749]: Started gocustomurls.service - GocustomUrls. A custom url mapper for go packages!.

Jul 19 07:19:03 fedoramachine (stomurls)[3291]: gocustomurls.service: Failed to determine supplementary groups: Operation not permitted

Jul 19 07:19:03 fedoramachine systemd[749]: gocustomurls.service: Main process exited, code=exited, status=216/GROUP

Jul 19 07:19:03 fedoramachine systemd[749]: gocustomurls.service: Failed with result 'exit-code'.

Based on the error above, it seemed that the group was incorrect. This observation was also confirmed after viewing this. So I removed the User=, Group= and Environment= directives from the unit file and restarted the service.

[Unit]

Description=GocustomUrls. A custom url mapper for go packages!

After=network-online.target

[Service]

ExecStart=/home/gols/gocustomurls -conf /home/gols/.config/gocustomurls/config.json

SyslogIdentifier=gocustomurls

StandardError=journal

WorkingDirectory=/home/gols

Type=simple

[Install]

WantedBy=multi-user.target
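A quick way to catch this before copying a unit into the user directory is to scan it for the two directives. This is a sketch under the assumption, based on the 216/GROUP failure above, that a per-user manager cannot switch user or group; the helper name unit_sets_user_or_group is made up.

```shell
# Hypothetical helper: succeed when the unit file given as $1 contains a
# User= or Group= directive at the start of a line.
unit_sets_user_or_group() {
    grep -Eq '^[[:space:]]*(User|Group)=' "$1"
}

# Usage (not executed here):
#   if unit_sets_user_or_group gocustomurls.service; then
#       echo "remove User=/Group= before installing as a user service"
#   fi
```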

$ sudo cp gocustomurls.service /home/gols/.config/systemd/user/

$ sudo chown -R gols:gols /home/gols

$ sudo machinectl shell --uid=gols

Connected to the local host. Press ^] three times within 1s to exit session.

$ exit

logout

Connection to the local host terminated.

$ sudo systemctl -M gols@ --user daemon-reload

$ sudo systemctl -M gols@ --user restart gocustomurls.service

$ sudo systemctl -M gols@ --user status gocustomurls.service

× gocustomurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/home/gols/.config/systemd/user/gocustomurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/user/service.d

└─10-timeout-abort.conf

Active: failed (Result: exit-code) since Fri 2024-07-19 10:02:31 UTC; 8min ago

Duration: 1ms

Process: 2919 ExecStart=/home/gols/gocustomurls -conf /home/gols/.config/gocustomurls/config.json (code=exited, status=203/EXEC)

Main PID: 2919 (code=exited, status=203/EXEC)

CPU: 1ms

The error was because of a missing rules.json. The binary that gocustomurls.service runs exits with a non-zero exit code if rules.json is not found. Adding a rules.json to the folder read by gocustomurls.service made it start successfully:

$ sudo cp rules.json /home/gols/.config/gocustomurls/

$ sudo systemctl -M gols@ --user daemon-reload

$ sudo systemctl -M gols@ --user restart gocustomurls.service

$ sudo systemctl -M gols@ --user status gocustomurls.service

● gocustomurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/home/gols/.config/systemd/user/gocustomurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/user/service.d

└─10-timeout-abort.conf

Active: active (running) since Sat 2024-07-20 05:16:31 UTC; 28s ago

Main PID: 3158

Tasks: 6 (limit: 2319)

Memory: 1.7M (peak: 2.0M)

CPU: 4ms

CGroup: /user.slice/user-993.slice/user@993.service/app.slice/gocustomurls.service

└─3158 /home/gols/gocustomurls -conf /home/gols/.config/gocustomurls/config.json

Testing with httpie results in success.

$ sudo dnf install httpie

$ http --body "http://localhost:7070/x/touche?go-get=1"

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8"/>

<meta name="go-import" content="scale.dev/x/migrate git https://codeberg.org/Gusted/mCaptcha.git">

<meta name="go-source" content="scale.dev/x/migrate https://codeberg.org/Gusted/mCaptcha.git https://codeberg.org/Gusted/mCaptcha.git/tree/main{/dir} https://codeberg.org/Gusted/mCaptcha.git/blob/main{/dir}/{file}#L{line}">

</head>

</html>

Attempt Four

I was not happy with the amount of configuration needed to get this working. So I decided to just write a system service instead because it seemed like the path of least resistance. I ran these commands:

$ echo $PATH

/home/vagrant/.asdf/shims:/home/vagrant/.asdf/bin:/home/vagrant/.local/bin:/home/vagrant/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin

$ sudo cp <gocustomurls_binary> /usr/local/bin # to put in the PATH

$ sudo mkdir -p /var/lib/$USER

$ sudo cp gocustomurls.service /etc/systemd/system/

$ sudo useradd --system --comment 'Go Custom Urls Service' --no-create-home $USER

useradd: failed to reset the lastlog entry of UID 992: No such file or directory

$ sudo passwd -d $USER

passwd: password changed.

$ getent passwd $USER

$USER:x:992:991:Go Custom Urls Service:/home/$USER:/bin/bash

$ sudo cp config.json /var/lib/$USER

$ sudo cp rules.json /var/lib/$USER

$ sudo ls /var/lib/$USER/

config.json rules.json

$ sudo chmod -R 770 /var/lib/$USER

$ sudo chown -R $USER:$USER /var/lib/$USER

$ sudo systemctl daemon-reload

$ sudo systemctl start gocustomurls.service

$ sudo systemctl status gocustomurls.service

● gocustomurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/etc/systemd/system/gocustomurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/service.d

└─10-timeout-abort.conf

Active: active (running) since Sat 2024-07-20 06:52:09 UTC; 23s ago

Main PID: 4020 (gocustomurls)

Tasks: 6 (limit: 2319)

Memory: 7.1M (peak: 7.5M)

CPU: 14ms

CGroup: /system.slice/gocustomurls.service

└─4020 /usr/local/bin/gocustomurls -conf /var/lib/gourls/config.json

$ http --body "http://localhost:7070/x/touche?go-get=1"

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8"/>

<meta name="go-import" content="scale.dev/x/migrate git https://codeberg.org/Gusted/mCaptcha.git">

<meta name="go-source" content="scale.dev/x/migrate https://codeberg.org/Gusted/mCaptcha.git https://codeberg.org/Gusted/mCaptcha.git/tree/main{/dir} https://codeberg.org/Gusted/mCaptcha.git/blob/main{/dir}/{file}#L{line}">

</head>

</html>
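The file-staging part of this attempt (create the state directory, copy the JSON files, restrict the mode) can be collected in one helper. This is only a sketch; the function name stage_service_files is hypothetical, and the chown step is left out because it needs root.

```shell
# Hypothetical helper: copy the given files into a state directory and
# restrict it to owner and group (mode 770, as in the steps above).
stage_service_files() {
    dest=$1
    shift
    mkdir -p "$dest" || return 1
    cp -- "$@" "$dest"/ || return 1
    chmod -R 770 "$dest"
}

# Usage (not executed here, run as root):
#   stage_service_files /var/lib/$USER config.json rules.json
#   chown -R $USER:$USER /var/lib/$USER
```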

Attempt Five (Docker)

This application generates logs, and I wanted to view them outside the container. The log files written while the container runs would therefore have to be owned by the same user that invokes the docker command, so that I would not need sudo to view them. Based on that, a sample Dockerfile would look something like this:

# syntax=docker/dockerfile:1

FROM golang:1.20-alpine AS build

WORKDIR /app

RUN apk --update add --no-cache git bash make alpine-sdk g++ && \

git clone https://git.iratusmachina.com/iratusmachina/gocustomurls.git --branch feature/add-log-rotation gurls && \

cd gurls && make build

FROM alpine AS run

ARG USERNAME

ARG UID

ARG GID

ARG PORT

RUN mkdir -p /home/$USERNAME

COPY --from=build /app/gurls/artifacts/gocustomurls /home/$USERNAME/gocustomurls

RUN addgroup -g ${GID} ${USERNAME} && \

adduser --uid ${UID} -G ${USERNAME} --gecos "" --disabled-password --home "/home/$USERNAME" --no-create-home $USERNAME && \

chmod -R 755 /home/$USERNAME && \

chown -R ${UID}:${GID} /home/$USERNAME

ENV HOME=/home/$USERNAME

WORKDIR $HOME

EXPOSE $PORT

USER $USERNAME

# based on this link https://github.com/moby/moby/issues/5509#issuecomment-1962309549
CMD ["sh","-c", "/home/${USERNAME}/gocustomurls", "-conf", "${HOME}/config.json"]

Running this set of commands produces an error:

$ docker build -t testimage:alpine --build-arg UID=$(id -u) --build-arg GID=$(id -g) --build-arg USERNAME=$(whoami) --build-arg PORT=7070 --no-cache .

...

=> => naming to docker.io/library/testimage:alpine

$ docker run -d -p 7070:7070 -v ${PWD}:/home/$(whoami) testimage:alpine

d7f5011b4e7f6317757a3ee8fe76fe710f8fd5ed1ac6a6e5c32ad5393c78f4c0

$ http --body "http://localhost:7070/x/touche?go-get=1"

http: error: ConnectionError: HTTPConnectionPool(host='localhost', port=7070): Max retries exceeded with url: /x/touche?go-get=1 (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f986fc73410>: Failed to establish a new connection: [Errno 111] Connection refused')) while doing a GET request to URL: http://localhost:7070/x/touche?go-get=1

So checking the logs,

$ docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d7f5011b4e7f testimage:alpine "sh -c /home/${USERN…" 56 seconds ago Exited (127) 55 seconds ago frosty_leakey

$ docker logs d7f5011b4e7f

-conf: line 0: /home//gocustomurls: not found
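The shape of this message follows from how sh -c treats its arguments: only the first string after -c is the command, and the remaining arguments become $0, $1 and so on of that inline script. In addition, a build ARG such as USERNAME is not present in the container's environment at run time, which is why the path collapses to /home//gocustomurls. A small sketch of the argument handling (the config path is a placeholder):

```shell
# Everything after the command string is assigned to $0, $1, ... of the
# inline script; it is not appended to the command itself.
sh -c 'echo "name=$0 first_arg=$1"' -conf /tmp/config.json
```

This prints name=-conf first_arg=/tmp/config.json, matching the "-conf:" prefix in the container log.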

So I attempted to fix the above error by putting the CMD command in a start.sh file by doing this:

# syntax=docker/dockerfile:1

...

RUN echo "${HOME}/gocustomurls -conf ${HOME}/config.json" > start.sh \

&& chown ${UID}:${GID} start.sh \

&& chmod u+x start.sh

EXPOSE $PORT

USER $USERNAME

CMD ["sh", "-c", "./start.sh" ]

This also produced an error, after building and running the container as shown below:

$ docker logs e9abe2bed4b1

sh: ./gocustomurls: not found

This perplexed me. So I decided to print the environment with printenv and run ls in the start.sh file. So after doing that, building and re-running the container, I got this:

$ docker logs 98dd740dc727

HOSTNAME=98dd740dc727

SHLVL=2

HOME=/home/vagrant

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

PWD=/

total 12K

drwxr-xr-x 2 vagrant vagrant 61 Jul 21 21:30 .

drwxr-xr-x 1 root root 21 Aug 3 14:06 ..

-rw-r--r-- 1 vagrant vagrant 2.4K Aug 3 14:06 Dockerfile

-rw-r--r-- 1 vagrant vagrant 131 Jul 21 21:18 config.json

-rw-r--r-- 1 vagrant vagrant 471 Jul 21 18:40 rules.json

This shows that the gocustomurls binary was no longer in /home/$USERNAME by the time the container ran: the bind mount of ${PWD} onto that directory hides whatever the image had placed there. This was confirmed here. So I instead used the approach shown here. There is also the option of using a data-only volume, but that is for another day. After some more reading, I came up with a new Dockerfile.

# syntax=docker/dockerfile:1.4

FROM golang:1.20-alpine AS build

WORKDIR /app

RUN <<EOF

apk --update add --no-cache git bash make alpine-sdk g++

git clone https://git.iratusmachina.com/iratusmachina/gocustomurls.git --branch feature/add-log-rotation gurls

cd gurls

make build

EOF

FROM alpine AS run

ARG USERNAME

ARG UID

ARG GID

ARG PORT

RUN <<EOF

addgroup --gid ${GID} ${USERNAME}

adduser --uid ${UID} --ingroup ${USERNAME} --gecos "" --disabled-password --home "/home/$USERNAME" $USERNAME

chmod -R 755 /home/$USERNAME

chown -R ${UID}:${GID} /home/$USERNAME

EOF

COPY --from=build /app/gurls/artifacts/gocustomurls /home/$USERNAME/gocustomurls

RUN <<EOF

mkdir -p /var/lib/apptemp

cp /home/$USERNAME/gocustomurls /var/lib/apptemp/gocustomurls

chown -R ${UID}:${GID} /home/$USERNAME

chown -R ${UID}:${GID} /var/lib/apptemp

ls -lah /home/$USERNAME

EOF

ENV HOME=/home/$USERNAME

COPY <<EOF start.sh

#!/usr/bin/env sh

printenv

cd "${HOME}"

ls -lah .

if [ ! -f "${HOME}/gocustomurls" ]

then

cp /var/lib/apptemp/gocustomurls ${HOME}/gocustomurls

rm /var/lib/apptemp/gocustomurls

fi

${HOME}/gocustomurls -conf ${HOME}/config.json

EOF

RUN <<EOF

chown ${UID}:${GID} start.sh

chmod u+x start.sh

EOF

EXPOSE $PORT

USER $USERNAME

CMD ["sh", "-c", "./start.sh" ]

I also changed the run command from

$ docker run -d -p 7070:7070 -v ${PWD}:/home/$(whoami) testimage:alpine

to

$ docker run -d -p 7070:7070 -v ${PWD}/otherfiles:/home/$(whoami) testimage:alpine

This keeps the Dockerfile out of the directory that is mounted into the container. I also added journald logging to the run command so I can use journalctl to view the logs. Running with this addition is shown below:

$ docker run --log-driver=journald -d -p 7070:7070 -v ${PWD}/otherfiles:/home/$(whoami) testimage:alpine

$ sudo journalctl CONTAINER_NAME=vigilant_mclaren

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: HOSTNAME=561944ae05ca

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: SHLVL=2

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: HOME=/home/vagrant

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: PWD=/

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: total 9M

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: drwxr-xr-x 2 vagrant vagrant 63 Aug 3 14:36 .

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: drwxr-xr-x 1 root root 21 Aug 3 14:29 ..

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: -rw-r--r-- 1 vagrant vagrant 131 Jul 21 21:18 config.json

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: -rwxr-xr-x 1 vagrant vagrant 9.3M Aug 3 14:31 gocustomurls

Aug 03 14:37:19 fedoramachine 561944ae05ca[1228]: -rw-r--r-- 1 vagrant vagrant 471 Jul 21 18:40 rules.json

This works. I then wanted to see if I could run this Dockerfile from a non-root, non-login account. After moving the requisite files, I ran the build command

bash-5.2$ docker build -t testimage:alpine --build-arg UID=$(id -u) --build-arg GID=$(id -g) --build-arg USERNAME=$(whoami) --build-arg PORT=7070 --no-cache --progress=plain .

ERROR: mkdir /home/golsuser: permission denied

I am using the --progress=plain option to allow me to use echo during the build process. This error occurs because the docker binary cannot find or create the .docker directory in this user's home directory. Normally, this folder is auto-created when you run docker build. So to remove this error, I ran this instead.

bash-5.2$ HOME=${PWD} docker build -t testimage:alpine --build-arg UID=$(id -u) --build-arg GID=$(id -g) --build-arg USERNAME=$(whoami) --build-arg PORT=7070 --no-cache --progress=plain .

This worked. Now I normally run my dockerfiles using Docker Compose. So a sample compose file for the Dockerfile is shown below:

services:
  app:
    build:
      context: .
      dockerfile: Dockerfile
      args:
        - USERNAME=${USERNAME}
        - UID=${USER_ID}
        - GID=${GROUP_ID}
        - PORT=${PORT:-7070}
      labels:
        - "maintainer=iratusmachina"
    image: appimage/gocustomurls
    logging:
      driver: journald
    container_name: app_cont
    ports:
      - "7070:7070"
    volumes:
      - ${PWD}/otherfiles:/home/${USERNAME}

I also wanted to run the compose file using systemd. So the systemd unit file is shown below:

[Unit]

Description=GocustomUrls. A custom url mapper for go packages!

After=docker.service

PartOf=docker.service

[Service]

Type=oneshot

RemainAfterExit=yes

WorkingDirectory=/var/lib/golsuser

User=golsuser

Group=golsuser

ExecStart=/bin/bash -c "HOME=/var/lib/golsuser USER_ID=$(id -u) GROUP_ID=$(id -g) USERNAME=$(whoami) docker compose --file /var/lib/golsuser/docker-compose.yml up --detach --force-recreate"

ExecStop=/bin/bash -c "HOME=/var/lib/golsuser USER_ID=$(id -u) GROUP_ID=$(id -g) USERNAME=$(whoami) docker compose --file /var/lib/golsuser/docker-compose.yml down -v"

SyslogIdentifier=gurls

StandardError=journal

[Install]

WantedBy=multi-user.target

So running this service produced this output:

$ sudo systemctl start gurls.service

$ sudo systemctl status gurls.service

● gurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/etc/systemd/system/gurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/service.d

└─10-timeout-abort.conf

Active: active (exited) since Sat 2024-08-03 18:08:46 UTC; 50s ago

Process: 24117 ExecStart=/bin/bash -c HOME=/var/lib/golsuser USER_ID=$(id -u) GROUP_ID=$(id -g) USERNAME=$(whoami) docker compose --file /var/lib/golsuser/docker-compose.yml up --detach --force-recreate (code=exited, status=0/SUCCESS)

Main PID: 24117 (code=exited, status=0/SUCCESS)

CPU: 125ms

Aug 03 18:08:45 fedoramachine gurls[24132]: #17 DONE 0.0s

Aug 03 18:08:45 fedoramachine gurls[24132]: #18 [app] resolving provenance for metadata file

Aug 03 18:08:45 fedoramachine gurls[24132]: #18 DONE 0.0s

Aug 03 18:08:45 fedoramachine gurls[24132]: Network golsuser_default Creating

Aug 03 18:08:45 fedoramachine gurls[24132]: Network golsuser_default Created

Aug 03 18:08:45 fedoramachine gurls[24132]: Container app_cont Creating

Aug 03 18:08:45 fedoramachine gurls[24132]: Container app_cont Created

Aug 03 18:08:45 fedoramachine gurls[24132]: Container app_cont Starting

Aug 03 18:08:46 fedoramachine gurls[24132]: Container app_cont Started

Aug 03 18:08:46 fedoramachine systemd[1]: Finished gurls.service - GocustomUrls. A custom url mapper for go packages!.

$ sudo systemctl stop gurls.service

$ sudo systemctl status gurls.service

○ gurls.service - GocustomUrls. A custom url mapper for go packages!

Loaded: loaded (/etc/systemd/system/gurls.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/service.d

└─10-timeout-abort.conf

Active: inactive (dead)

Aug 03 18:08:46 fedoramachine systemd[1]: Finished gurls.service - GocustomUrls. A custom url mapper for go packages!.

Aug 03 18:10:10 fedoramachine systemd[1]: Stopping gurls.service - GocustomUrls. A custom url mapper for go packages!...

Aug 03 18:10:10 fedoramachine gurls[24402]: Container app_cont Stopping

Aug 03 18:10:20 fedoramachine gurls[24402]: Container app_cont Stopped

Aug 03 18:10:20 fedoramachine gurls[24402]: Container app_cont Removing

Aug 03 18:10:20 fedoramachine gurls[24402]: Container app_cont Removed

Aug 03 18:10:20 fedoramachine gurls[24402]: Network golsuser_default Removing

Aug 03 18:10:20 fedoramachine gurls[24402]: Network golsuser_default Removed

Aug 03 18:10:20 fedoramachine systemd[1]: gurls.service: Deactivated successfully.

Aug 03 18:10:20 fedoramachine systemd[1]: Stopped gurls.service - GocustomUrls. A custom url mapper for go packages!.

Conclusion

This was a fun yet frustrating experience in trying to piece together how to run services with systemd. I am glad that I went through it so I can reference it next time. I decided to go with the systemd approach (attempt four). With Go artifacts being a single binary, the overhead of managing Docker was not worth it for me. If I wanted to remove the systemd service, I could use the commands from this resource.

Mar 16, 2024

REASONS

So I am using gitea-1.19.4 as well as Woodpecker-0.15.x. The current version(s) of gitea and woodpecker are 1.21.x and 2.3.x respectively.

I wanted to upgrade to these versions because of:

(a) Gitea 1.21.x comes with Gitea Actions enabled by default. This is similar to GitHub Actions, which I want to learn as we use that at $DAYJOB.

(b) I want to upgrade to a new version every year. This is to make upgrades manageable and repeatable so by doing it more frequently, I would have a runbook for how to upgrade.

(c) These versions (1.19.x, 0.x.x) are no longer actively maintained, which could mean that they are stable but not receiving security fixes which is something I want to avoid.

(d) Woodpecker 2.3.x adds the ability to use Forgejo, which is a community fork of Gitea and runs Codeberg. I may switch to Forgejo in the future as I may want to hack on it and contribute back to it.

PLAN OF ACTION

Just perusing the Gitea changelog and Woodpecker changelog gives me the sense that a lot has changed between versions. This means that the upgrade may or may not be seamless. So my plan of action is this:

(a) Copy over my data from the two instances to my local computer

(b) Upgrade Gitea. (Ideally, I would have loved to resize the instances but I am too lazy to do so)

(c) Upgrade Woodpecker (Ideally, I would have loved to resize the instances but I am too lazy to do so)

(d) Test. If botched, then recreate new instances (what a pain in the ass that would be)

GITEA

For the Gitea instance, I would mainly be upgrading to 1.21.x, and for the Woodpecker instance, I would be upgrading to 2.3.x. For Gitea, you would:

- Backup database

- Backup Gitea config

- Backup Gitea data files in APP_DATA_PATH

- Backup Gitea external storage (eg: S3/MinIO or other storages if used)

The steps I have used are taken from here and here.

First, define some environment variables

$ export GITEABIN="/usr/local/bin/gitea"

$ export GITEACONF="/etc/gitea/app.ini"

$ export GITEAUSER="git"

$ export GITEAWORKPATH="/var/lib/gitea"

(a) Get current version

$ sudo --user "${GITEAUSER}" "${GITEABIN}" --config "${GITEACONF}" --work-path "${GITEAWORKPATH}" --version | cut -d ' ' -f 3

(b) Get gitea version to install

$ export GITEAVERSION=$(curl --connect-timeout 10 -sL https://dl.gitea.com/gitea/version.json | jq -r .latest.version)
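With both version strings in hand, it is worth checking that the download is actually newer before touching anything. This is a sketch rather than part of the original steps: it assumes GNU sort with -V (version sort), the helper name is_newer_version is made up, and both the sample version line and the target 1.21.7 are illustrative values.

```shell
# Hypothetical helper: succeed when $2 is a strictly newer version than $1.
# Relies on the -V (version sort) option of GNU sort.
is_newer_version() {
    current=$1
    candidate=$2
    [ "$current" != "$candidate" ] &&
        [ "$(printf '%s\n%s\n' "$current" "$candidate" | sort -V | tail -n 1)" = "$candidate" ]
}

# Sample line in the shape the cut command above expects: the third
# space-separated field is the version. The text itself is illustrative.
sample='Gitea version 1.19.4 built with GNU Make'
installed=$(printf '%s\n' "$sample" | cut -d ' ' -f 3)

if is_newer_version "$installed" 1.21.7; then
    echo "upgrade from $installed"
fi
```

A plain string comparison would not do here, since 1.9.0 sorts after 1.10.0 lexically.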

(c) Download version from (b) and uncompress

$ binname="gitea-${GITEAVERSION}-linux-amd64"

$ binurl="https://dl.gitea.com/gitea/${GITEAVERSION}/${binname}.xz"

$ curl --connect-timeout 10 --silent --show-error --fail --location -O "$binurl{,.sha256,.asc}"

$ sha256sum -c "${binname}.xz.sha256"

$ rm "${binname}".xz.{sha256,asc}

$ xz --decompress --force "${binname}.xz"
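The checksum step matters because a truncated or corrupted download should never reach the decompress step. A minimal sketch of tying the two together, assuming sha256sum from coreutils and a checksum file stored next to the download; the helper name verify_download is hypothetical.

```shell
# Hypothetical helper: check <file> against the checksum list <file>.sha256
# stored next to it. Succeeds only when the checksum matches.
verify_download() {
    dir=$(dirname "$1")
    name=$(basename "$1")
    ( cd "$dir" && sha256sum -c "$name.sha256" >/dev/null 2>&1 )
}

# Usage (not executed here): decompress only after the check passes.
#   verify_download "${binname}.xz" && xz --decompress --force "${binname}.xz"
```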

(d) Flush Gitea queues so that you can properly backup

$ sudo --user "${GITEAUSER}" "${GITEABIN}" --config "${GITEACONF}" --work-path "${GITEAWORKPATH}" manager flush-queues

(e) Stop gitea

$ sudo systemctl stop gitea

(f) Dump database using pg_dump

$ pg_dump -U $USER $DATABASE > gitea-db.sql

(g) Dump the gitea config and files

$ mkdir temp

$ chmod 777 temp # necessary otherwise the dump fails

$ cd temp

$ sudo --user "${GITEAUSER}" "${GITEABIN}" --config "${GITEACONF}" --work-path "${GITEAWORKPATH}" dump --verbose

(h) Move the downloaded gitea binary to the new location

$ sudo cp -f "${GITEABIN}" "${GITEABIN}.bak"

$ sudo mv -f "${binname}" "${GITEABIN}"

(i) Restart Gitea

$ sudo chmod +x "${GITEABIN}"

$ sudo systemctl start gitea

$ sudo systemctl status gitea

To restore as taken from here

$ sudo systemctl stop gitea

$ sudo cp -f "${GITEABIN}.bak" "${GITEABIN}"

$ cd temp

$ unzip gitea-dump-*.zip

$ cd gitea-dump-*

$ sudo mv app.ini "${GITEACONF}"

$ sudo mv data/* "${GITEAWORKPATH}/data/"

$ sudo mv log/* "${GITEAWORKPATH}/log/"

$ sudo mv repos/* "${GITEAWORKPATH}/gitea-repositories/"

$ sudo chown -R "${GITEAUSER}:${GITEAUSER}" "${GITEACONF}" "${GITEAWORKPATH}"

$ cp ../gitea-db.sql .

$ psql -U $USER -d $DATABASE < gitea-db.sql

$ sudo systemctl start gitea

$ sudo systemctl stop gitea

# Regenerate repo hooks

$ sudo --user "${GITEAUSER}" "${GITEABIN}" --config "${GITEACONF}" --work-path "${GITEAWORKPATH}" admin regenerate hooks

WOODPECKER

(a) Stop agent and server

$ sudo systemctl stop woodpecker

$ sudo systemctl stop woodpecker-agent

(b) Back up the database with pg_dump, then the config files

$ pg_dump -U $USER $DATABASE > woodpecker-db.sql

$ sudo cp /etc/woodpecker-agent.conf woodpecker-agent.conf.bak

$ sudo cp /etc/woodpecker.conf woodpecker.conf.bak

(c) Download the binaries

$ woodpeckerversion=2.3.0

$ binurl="https://github.com/woodpecker-ci/woodpecker/releases/download/v${woodpeckerversion}/"

$ curl --connect-timeout 10 --silent --show-error --fail --location -O "${binurl}woodpecker{,-agent,-cli,-server}_${woodpeckerversion}_amd64.deb"

$ ls

woodpecker-cli_2.3.0_amd64.deb

woodpecker-agent_2.3.0_amd64.deb

woodpecker-server_2.3.0_amd64.deb

(d) Install the new ones

$ sudo dpkg -i ./woodpecker-*.deb

(e) Restart woodpecker

$ sudo systemctl start woodpecker

$ sudo systemctl start woodpecker-agent

(f) Delete backup files

$ sudo rm woodpecker-agent.conf.bak woodpecker.conf.bak

Unfortunately I did not find any restore functions for woodpecker. So I did it blindly.

TESTING

As part of the upgrade of the Woodpecker instance from 0.15.x to 2.3.x, the pipeline key in my woodpecker ymls was changed to steps as the pipeline key is deprecated in 2.3.x. So when I tried to test this change in the upgrade, I got this error message

2024/03/17 13:51:54 ...s/webhook/webhook.go:130:handler() [E] Unable to deliver webhook task[265]: unable to deliver webhook task[265] in https://<website-name>/api/hook?access_token=<token> due to error in http client: Post "https://<website-name>/api/hook?access_token=<token>": dial tcp <website-name>: webhook can only call allowed HTTP servers (check your webhook.ALLOWED_HOST_LIST setting), deny '<website-name>(x.x.x.x)'

So I googled the error and saw this. Basically, they reduced the scope of webhook delivery for security reasons by requiring another setting in the app.ini file. So I did the easy thing and added the setting below:

[webhook]

ALLOWED_HOST_LIST = *

Now that did not work. So I had to reconstruct my test repository by

- deleting my test repository I had on my Woodpecker instance

- deregistering the OAUTH application on the Gitea instance

- registering a new OAUTH application to give me a new client id and secret

- re-adding my test repository to my Woodpecker instance

When I then pushed a new commit, the builds were picked up. Now, I was not happy with this setting so I looked again at the PR in the link and saw that I could provide the website name for my instance and the IP address with the CIDR as values for the ALLOWED_HOST_LIST setting. So to get the IP address with the CIDR, I ran ip a s and picked the first non-loopback entry. So the new setting was:

[webhook]

ALLOWED_HOST_LIST = <website-name>,x.x.x.x/x

That worked. I also found this blog post that also does what I did to reduce the attack surface area for the webhooks.
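Picking the first non-loopback address can also be scripted. This is a minimal sketch that assumes the one-line output format of `ip -o -4 addr show`; the function name first_non_loopback_cidr is hypothetical.

```shell
#!/usr/bin/env bash
# Print the first non-loopback IPv4 address with its prefix length
# (for example 203.0.113.7/24) from `ip -o -4 addr show` style input on stdin.
first_non_loopback_cidr() {
    # In one-line mode, field 2 is the interface name and field 4 is address/prefix.
    awk '$2 != "lo" && $3 == "inet" { print $4; exit }'
}

# Usage on a real host (not executed here):
#   ip -o -4 addr show | first_non_loopback_cidr
```

The value printed is the host address with its prefix length, which is the same form as the x.x.x.x/x entry used in the setting above.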

OBSERVATIONS

-

On the whole, the upgrade of Gitea was very smooth as there was an ability to restore if I failed. Both pieces of software, though, were easy to upgrade. I initially panicked during the Woodpecker upgrade after receiving errors like this. After I read this, I saw that those errors did not affect the running of my Woodpecker instance.

-

Based on the issues I encountered in testing, I will do the following

- run the upgrades in a local docker-compose instance to iron out all the kinks.

- read all the PRs between the versions to determine what changed.

Aug 01, 2023

OUTLINE

INTRODUCTION

This blog is built with Pelican. Pelican is a python blog generator with no bells and whistles. So the way I normally deploy new blog entries is to:

(1) add the new blog entries to git

(2) push the commit from (1) to remote

(3) ssh into the box where the blog is

(4) pull down the commit from (2)

(5) regenerate the blog html

(6) copy over the blog html from (5) to the location where the webserver (Nginx) serves the blog.
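The manual steps above could be collected into a small script. This is only a dry-run sketch under stated assumptions: the host, checkout directory, web root, and the remote and branch names (origin, main) are hypothetical placeholders, while make publish and the output directory are taken from the pipeline later in this post.

```shell
#!/usr/bin/env bash
# Placeholders (assumptions): adjust to the real host, checkout directory and web root.
BLOG_HOST="${BLOG_HOST:-user@blog-host}"
REPO_DIR="${REPO_DIR:-blog}"
WEB_ROOT="${WEB_ROOT:-/var/www/example.com}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

deploy_blog() {
    # Step (2): push the commit to the remote.
    run git push origin main
    # Steps (3) to (6): log in, pull, regenerate the HTML, copy it to the web root.
    run ssh "$BLOG_HOST" "cd $REPO_DIR && git pull && make publish && sudo cp -r output/. $WEB_ROOT/"
}

# Usage: call deploy_blog to print the commands, or set DRY_RUN=0 first to execute them.
```

Keeping the dry-run mode as the default makes it possible to review the exact commands before anything touches the server.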

I wanted to automate this using my new Gitea and Woodpecker CI instance. First, I had to push the code to the Gitea instance. Second, I had to write a pipeline that could build the code and run the steps outlined above.

PUSHING REPO TO THE GITEA INSTANCE

-

Created an empty repo with the same name (iratusmachina) on the Gitea UI

-

Pulled down the empty repo (remote) to my local

$ git clone https://git.iratusmachina.com/iratusmachina/iratusmachina.git

-

Added new remote

$ git remote add gitea <remote-url-from-(1)>

-

Pushed to new remote

$ git push -u gitea <main-branch>

A (NOT-SO) BRIEF EXPLAINER ABOUT SSH

Deploying the blog has increased my knowledge about SSH. Much more than what is covered below is described here.

Basically, to communicate with a shell on a remote box securely, one would use SSH. After connecting, commands can be sent over SSH to be executed on the remote host.

So SSH is based on the client-server model, the server program running on the remote host and the client running on the local host (user's system). The server listens for connections on a specific network port, authenticates connection requests, and spawns the appropriate environment if the client on the user's system provides the correct credentials.

Generally, authentication uses public-key (asymmetric) cryptography, where there is a public key and a private key. Data encrypted with one of the keys can only be decrypted with the other key. In SSH, both the private and the public key reside on the client, while only the public key resides on the server.

To generate the public/private key pair, one would run the command

$ ssh-keygen -t ed25519 -C "your_email@example.com" # Here you can use a passphrase to further secure the keys

...

Output

Your identification has been saved in /root/.ssh/id_ed25519.

Your public key has been saved in /root/.ssh/id_ed25519.pub.

...

Then the user could manually copy the public key to the server, that is, append it to this file ~/.ssh/authorized_keys, if the user is already logged into the server. This file is respected by SSH only if it is not writable by anything apart from the owner and root (600 or rw-------). If the user is not logged into the remote server, this command can be used instead

$ ssh-copy-id username@remote_host # ssh-copy-id has to be installed on the local machine

OR

$ cat ~/.ssh/id_ed25519.pub | ssh username@remote_host "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys" # use if ssh-copy-id is not installed on the local machine

Then when you log in, the following sequence of events takes place.

(1) The SSH client on the local machine (the user's computer) requests SSH-key-based authentication from the server program on the remote host.

(2) The server program on the remote host looks up the user's public SSH key in the whitelist file ~/.ssh/authorized_keys.

(3) The server program on the remote host then creates a challenge (a random string).

(4) The server program on the remote host encrypts the challenge using the public key retrieved in (2). This encrypted challenge can only be decrypted with the associated private key.

(5) The server program on the remote host sends this encrypted challenge to the client on the local machine to test whether it actually has the associated private key.

(6) The client on the local machine decrypts the encrypted challenge from (5) with the user's private key.

(7) The client on the local machine prepares a response, encrypts the response with the user's private key and sends it to the server program on the remote host.

(8) The server program on the remote host receives the encrypted response and decrypts it with the public key from (2). If the challenge matches, this proves that the client on the local machine, trying to connect in (1), has the correct private key.

This is a simplified picture. In the current protocol version (SSH-2), the client proves possession of the private key by signing session-specific data, and the server verifies that signature with the public key from (2).

If the private SSH key has a passphrase (to improve security), a prompt would appear to enter the passphrase every time the private SSH key is used, to connect to a remote host.

An SSH agent helps to avoid having to repeatedly do this. This small utility stores the private key after the passphrase has been entered for the first time. It will be available for the duration of the user's terminal session, allowing the user to connect in the future without re-entering the passphrase. This is also important if the SSH credentials need to be forwarded. The SSH agent never hands these keys to client programs, but merely presents a socket over which clients can send it data and over which it responds with signed data. A side benefit of this is that the user can use their private key even with programs that the user doesn't fully trust.

To start the SSH agent, run this command:

$ eval $(ssh-agent)

Agent pid <number>

Why do we need to use eval instead of just ssh-agent? SSH needs two things in order to use ssh-agent: an ssh-agent instance running in the background, and an environment variable (SSH_AUTH_SOCK) that holds the path of the Unix socket that the agent uses for communication with other processes. If you just run ssh-agent, then the agent will start and print the commands that set this variable, but nothing executes them, so SSH will have no idea where to find the agent.
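The effect of eval can be seen without a real agent. In this minimal sketch, fake_agent and DEMO_AUTH_SOCK are hypothetical stand-ins for ssh-agent and SSH_AUTH_SOCK; the function only prints shell commands on stdout, the way ssh-agent does.

```shell
#!/usr/bin/env bash
# fake_agent is a hypothetical stand-in for ssh-agent: it only PRINTS shell
# commands on stdout and cannot change the caller's environment by itself.
fake_agent() {
    echo 'DEMO_AUTH_SOCK=/tmp/demo-agent.sock; export DEMO_AUTH_SOCK;'
}

unset DEMO_AUTH_SOCK

# Running it plainly just produces text; the variable stays unset.
fake_agent > /dev/null
before="${DEMO_AUTH_SOCK:-unset}"

# Wrapping it in eval executes the printed commands in the current shell.
eval "$(fake_agent)"
after="${DEMO_AUTH_SOCK:-unset}"

echo "before eval: ${before}"
echo "after eval:  ${after}"
```

The first line reports the variable as unset and the second reports the socket path, which mirrors what happens to SSH_AUTH_SOCK with and without eval.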

After that, the private key can be added to the ssh-agent, so that it can manage the key, using this command

$ ssh-add ~/.ssh/id_ed25519

After this, the user can verify that a connection can be made to the remote/server using this command (if the user has not done so before):

$ ssh username@remote_host

...

The authenticity of host '<remote_host> (<remote_host_ip>)' can't be established.

ECDSA key fingerprint is SHA256:OzvpQxRUzSfV9F/ECMXbQ7B7zbK0aTngrhFCBUno65c.

Are you sure you want to continue connecting (yes/no)?

If the user types yes, the SSH client writes the host public key to the known_hosts file ~/.ssh/known_hosts and won’t prompt the user again on the subsequent SSH connections to the remote_host. If the user doesn’t type yes, the connection to the remote_host is prevented. This interactive method allows for server verification to prevent man-in-the-middle attacks

User interaction is essentially unavailable during automation (CI), so nobody can answer this prompt. Another way of adding the host key is to use the ssh-keyscan utility as shown below:

$ ssh-keyscan -H <remote_host> >> ~/.ssh/known_hosts

The command above adds all the <remote_host> public keys and hashes of the hostnames of the public keys to the known_hosts file. Essentially ssh-keyscan just automates the process of retrieving the public key of the remote_host for inclusion in the known_hosts file without actually logging into the server.

So to run commands on the remote host through ssh, the user can run this command:

$ ssh -o StrictHostKeyChecking=no -i ~/.ssh/id_ed25519 <username>@<remote-host-ip> "<command>"

The StrictHostKeyChecking=no option tells the SSH client on the local machine (the user's computer) to trust the <remote-host> key and continue connecting. The -i option is used because we are not using the ssh-agent to manage the private key, so we have to tell the SSH client where our private key is located.

WRITING THE PIPELINE YML

I followed the instructions here. The general pipeline syntax for 0.15.x is:

pipeline:
  <step-name-1>:
    image: <image-name of container where the commands will be run>
    commands:
      - <First-command>
      - <Second-command>
    secrets: [ <secret-1>, ... ]
    when:
      event: [ <events from push, pull_request, tag, deployment, cron, manual> ]
      branch: <your branch>
  <step-name-2>:
    ...

The commands are run as a shell script. So the commands are run with this command-line approximation:

$ docker run --entrypoint=build.sh <image-name of container where the commands will be run>

The when section is a set of conditions. The when section is normally attached to a stage or a pipeline. If the when section is attached to a stage and the set of conditions of the when section are satisfied, then the commands belonging to that stage are run. The same applies for a pipeline.

The final pipeline is shown below:

pipeline:
  build:
    image: python:3.11-slim
    commands:
      - apt-get update
      - apt-get install -y --no-install-recommends build-essential gcc make rsync openssh-client && apt-mark auto build-essential gcc make rsync openssh-client
      - command -v ssh
      - mkdir -p ~/.ssh
      - chmod 700 ~/.ssh
      - echo "$${BLOG_HOST_SSH_PRIVATE_KEY}" > ~/.ssh/bloghost
      - echo "$${BLOG_HOST_SSH_PUBLIC_KEY}" > ~/.ssh/bloghost.pub
      - chmod 600 ~/.ssh/bloghost
      - chmod 600 ~/.ssh/bloghost.pub
      - ssh-keyscan -H $${BLOG_HOST_IP_ADDR} >> ~/.ssh/known_hosts
      - ls -lh ~/.ssh
      - cat ~/.ssh/known_hosts
      - python -m venv /opt/venv
      - export PATH="/opt/venv/bin:$${PATH}"
      - export PYTHONDONTWRITEBYTECODE="1"
      - export PYTHONUNBUFFERED="1"
      - echo $PATH
      - ls -lh
      - pip install --no-cache-dir -r requirements.txt
      - make publish
      - ls output
      - rsync output/ -Pav -e "ssh -o StrictHostKeyChecking=no -i ~/.ssh/bloghost" $${BLOG_HOST_SSH_USER}@$${BLOG_HOST_IP_ADDR}:/home/$${BLOG_HOST_SSH_USER}/contoutput
      - ssh -o StrictHostKeyChecking=no -i ~/.ssh/bloghost $${BLOG_HOST_SSH_USER}@$${BLOG_HOST_IP_ADDR} "cd ~; sudo cp -r contoutput/. /var/www/iratusmachina.com/"
      - rm -rf ~/.ssh
    secrets: [ blog_host_ssh_private_key, blog_host_ip_addr, blog_host_ssh_user, blog_host_ssh_public_key ]
    when:
      event: [ push ]
      branch: main

OBSERVATIONS

-

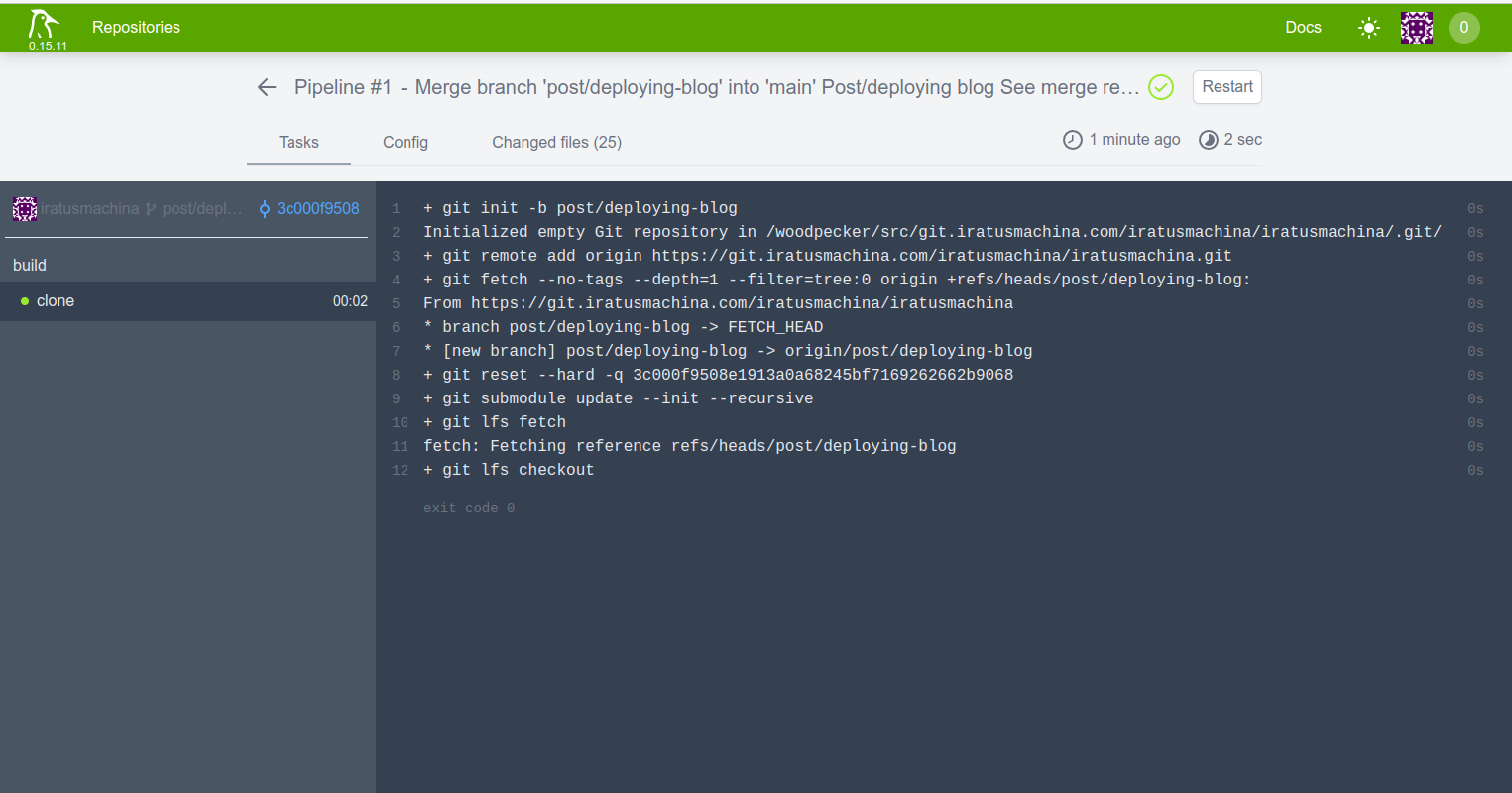

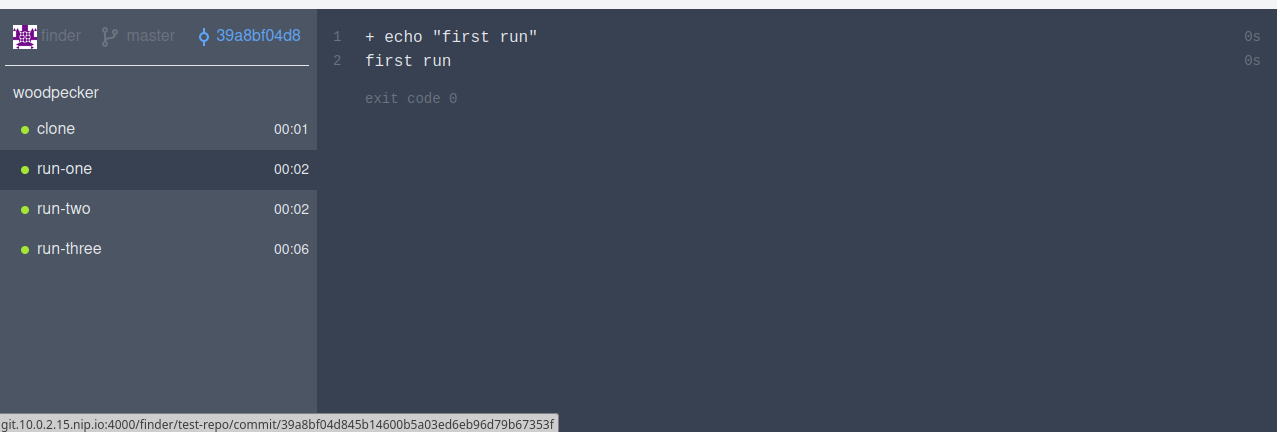

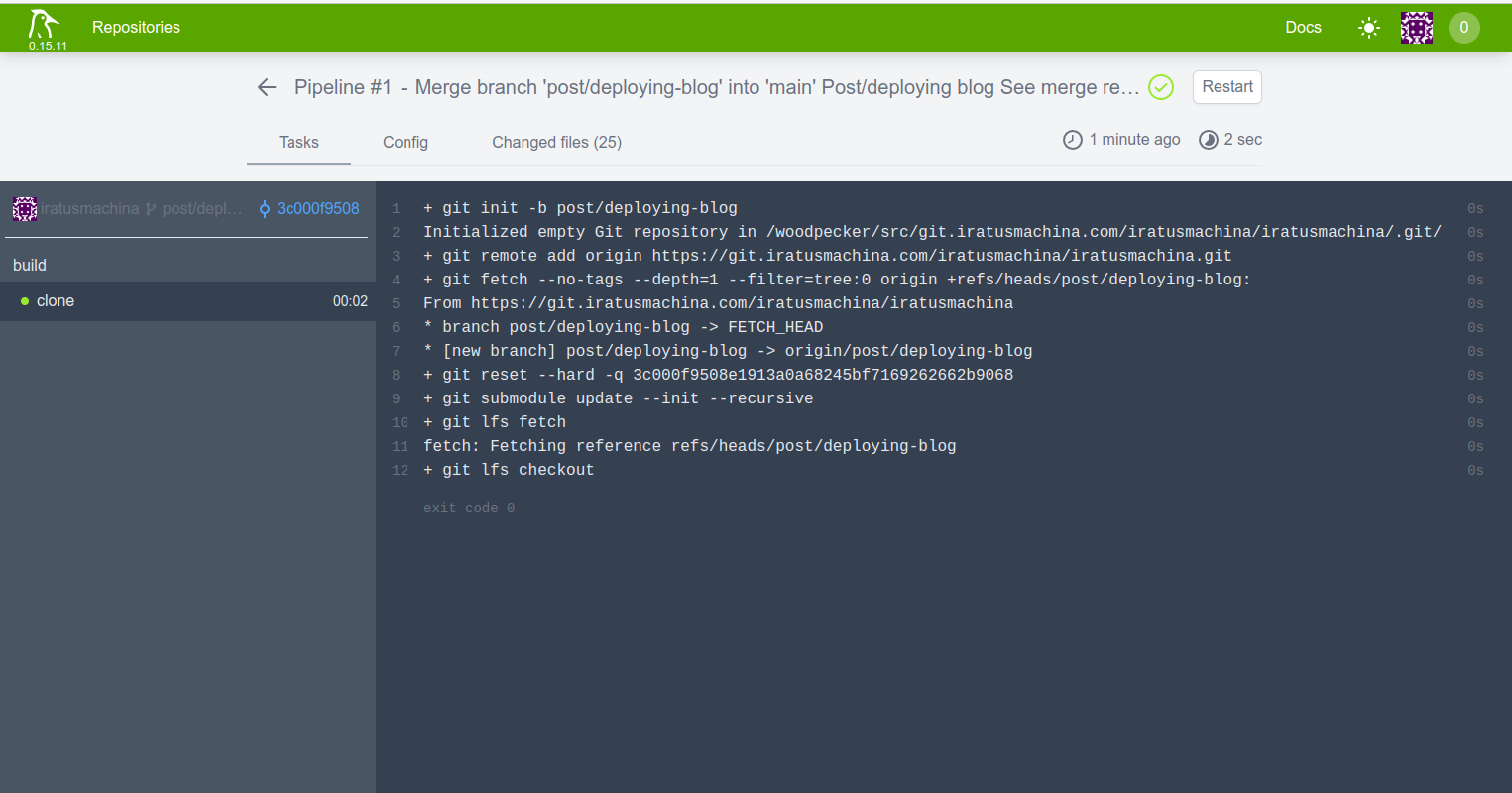

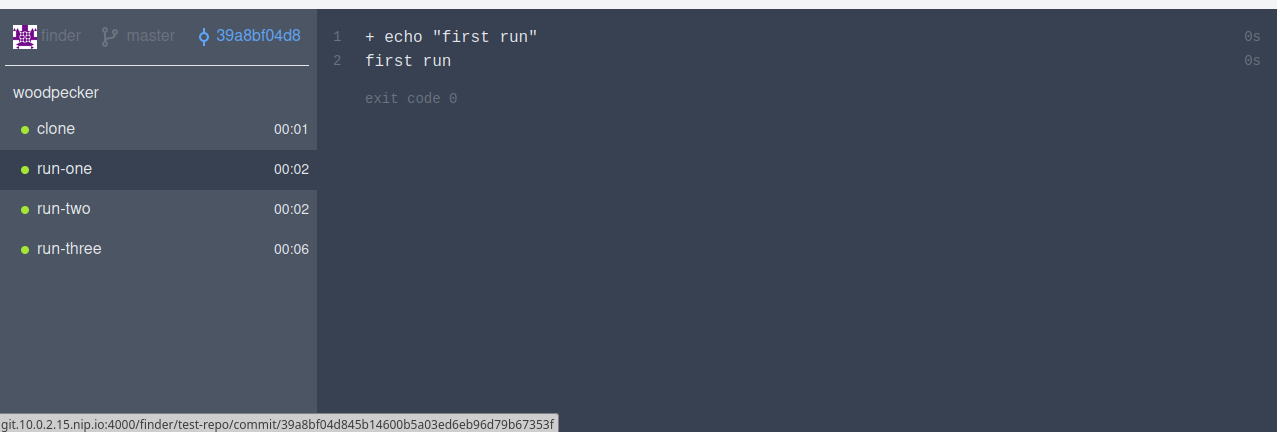

The first thing Woodpecker runs before any stage in a pipeline is the clone step. This clone step pulls down the code from the forge (in this case, the Gitea instance). That is, on every push to the remote, the webhook that Woodpecker registered at the Gitea instance is triggered, and the clone step pulls down the branch that triggered it. This branch is pulled into the volume/workspace for the commands to work on. This is shown in the accompanying screenshots.

-

By attaching this when condition,

when:
  event: [ push ]
  branch: main

to a stage, the commands in the stage get executed on a push to the branch called main. I also had the event pull_request as part of the event list, but I found out that this means the stage also runs on the creation of a PR into main, which is not what I want. If I had some tests, the pull_request event would come in handy.

-

I tried to split the commands into different stages but it did not work. The documentation here says:

Changes to files are persisted through steps as the same volume is mounted to all steps

I think this means that if you installed something in a previous stage in a pipeline, the command you installed would not be available in the next stage in that pipeline. The "Changes to files" refers only to the files created/modified/deleted directly by commands in the stage.

-

A global when does not seem to work for Woodpecker v0.15. A global when is something like this (taken from the previous example):

when:
  event: [ <events from push, pull_request, tag, deployment, cron, manual> ]
  branch: <your branch>

pipeline:
  <step-name-1>:
    image: <image-name of container where the commands will be run>
    commands:
      - <First-command>
      - <Second-command>
    secrets: [ <secret-1>, ... ]
  <step-name-2>:
    ...

So a global when applies to all the stages in the pipeline. In my case, it appears the when condition applied only if it was associated with a stage.

-

Initially I tried to run the pipeline defined above with a passphrase-protected key pair, but I ran into connection issues. Using a passphrase-less key pair solved my connection issues.

-

I tried to pass my login user's password over ssh to run commands as part of the sudo group. But I kept getting errors like these:

[sudo] password for ********: Sorry, try again.

[sudo] password for ********:

sudo: no password was provided

sudo: 1 incorrect password attempt

So instead, I made it so that my login user would not be asked for a password when running the commands that I wanted to run with sudo. I did this by editing the sudoers file.

$ su -

$ cp /etc/sudoers /root/sudoers.bak

$ visudo

user ALL=(ALL) NOPASSWD:<command-1>,<command-2> # Add this line at the end of the file, inside the visudo editor

$ exit
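Commands listed in a sudoers entry have to be given as absolute paths, so it can help to generate the line with a small helper that rejects anything else. This is a minimal sketch; the function name sudoers_nopasswd_line and the example user and commands are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a NOPASSWD sudoers line for a user and a list of
# commands, refusing commands that are not absolute paths.
sudoers_nopasswd_line() {
    local user="$1" cmd cmds=""
    shift
    for cmd in "$@"; do
        case "$cmd" in
            /*) cmds="${cmds:+${cmds}, }${cmd}" ;;
            *)  echo "not an absolute path: $cmd" >&2; return 1 ;;
        esac
    done
    [ -n "$cmds" ] || { echo "no commands given" >&2; return 1; }
    printf '%s ALL=(ALL) NOPASSWD: %s\n' "$user" "$cmds"
}

# Example (not executed here):
#   sudoers_nopasswd_line deploy /usr/bin/rsync /bin/cp
# The result can be pasted while inside visudo; `visudo -c` checks the syntax afterwards.
```

Restricting the entry to the specific commands the pipeline needs keeps the passwordless access as narrow as possible.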

ENHANCEMENTS

-

Generate the ssh keys for deploying in CI and use them to deploy the blog. That way, it is more secure than the current method. I would have to delete the generated key from the public server to keep the authorized_keys file from getting too big.

-

Eliminate calling the ubuntu servers/python servers by providing a user-defined image where I would have installed all the software I need (openssh, Pelican, rsync). This would reduce points of failure as well as enable me to split the stages in the pipeline for better readability.

-

Upgrade to Woodpecker 1.0.x from 0.15.x

Jul 01, 2023

OUTLINE

INTRODUCTION

CI/CD is a fixture of my job as a software engineer and I wanted to learn more about it. So I looked into self-hosting a git forge and a build server. My criteria, in order, were:

- easy to administer

- able to be left alone, that is, it does not need constant maintenance

- easy integration between the forge and build server

- simple UI

Looking at the Awesome Self-hosted page on Github, Wikipedia, and r/selfhosted, I settled on Gitea for the git forge because:

- it is similar to Github in feel

- it has Gitea actions, which is based on Github actions. This makes it easy for me to learn Github actions later on.

- It has integrations to Gitlab which is another git forge that I use.

As for the build server, I was torn between Jenkins (which I used at a previous job) and Woodpecker CI. I went with Woodpecker CI because:

- It is "docker-based", which was very useful when I was trying it out. This means that each instance of a build is a workspace based on docker. This is similar to what most build systems (AWS, Azure, Github) do.

- It is very simple

- It has a native integration with Gitea.

- It is written in go which is the same as Gitea

Now, I noticed that most online examples that combine Gitea and Woodpecker CI use a docker-compose approach. I was wary of that path, especially when interfacing with Nginx. Since they are both written in go and are single binaries (well, in the case of Woodpecker CI, three binaries), I figured that I should be able to run them using systemd instead of docker. This will help me learn linux further and will also interface properly with the Nginx that is installed on the boxes where they would both live.

INSTALL INSTRUCTIONS

I am using Debian instead of Ubuntu as the OS for the boxes where Gitea and Woodpecker CI would live. This is to avoid dealing with snaps. The instructions to set this up are as follows:

(1). Create a domain for each. Mine is woodpecker.iratusmachina.com for the Woodpecker CI and ci.iratusmachina.com for the Gitea instance. I use DigitalOcean to host all my projects so domain creation was easy. I just needed to add A records for both.

(2). Ssh into the Woodpecker box. Install docker by following the instructions in these links: here and here

(3). Ssh into the Gitea Box. Download gitea from here

$ wget https://dl.gitea.com/gitea/1.19.4/gitea-1.19.4-linux-amd64

$ sudo mv gitea-1.19.4-linux-amd64 /usr/local/bin/gitea

$ sudo chmod +x /usr/local/bin/gitea

(4). Ssh into the Woodpecker box. Download the woodpecker binaries from here.

$ wget https://github.com/woodpecker-ci/woodpecker/releases/download/v0.15.11/woodpecker-agent_0.15.11_amd64.deb

$ wget https://github.com/woodpecker-ci/woodpecker/releases/download/v0.15.11/woodpecker-cli_0.15.11_amd64.deb

$ wget https://github.com/woodpecker-ci/woodpecker/releases/download/v0.15.11/woodpecker-server_0.15.11_amd64.deb

$ sudo dpkg -i ./woodpecker-*

(5). Create users to allow each application to manage their own data.

# In the Gitea box

$ sudo apt install git

$ sudo adduser --system --shell /bin/bash --gecos 'Git Version Control' --group --disabled-password --home /home/git git

# In the Woodpecker Box

$ sudo adduser --system --shell /bin/bash --gecos 'Woodpecker CI' --group --disabled-password --home /home/<woodpecker-username> <woodpecker-username>

$ sudo usermod -aG docker <woodpecker-username>

(6). Ssh into the Gitea Box. Create directories for gitea based on this link

$ sudo mkdir -p /var/lib/<gitea-username>/{custom,data,log}

$ sudo chown -R git:git /var/lib/<gitea-username>/

$ sudo chmod -R 750 /var/lib/<gitea-username>/

$ sudo mkdir /etc/<gitea-username>

$ sudo chown root:git /etc/<gitea-username>

$ sudo chmod 770 /etc/<gitea-username>

(7). Ssh into the Woodpecker box and create directories for the woodpecker user.

$ sudo mkdir -p /var/lib/<woodpecker-username>

$ sudo chown -R <woodpecker-username>:<woodpecker-username> /var/lib/<woodpecker-username>

$ sudo chmod -R 750 /var/lib/<woodpecker-username>/

$ sudo touch /etc/woodpecker.conf

$ sudo chmod 770 /etc/woodpecker.conf

(8). Set up database with this guide

# In both boxes

$ sudo apt-get install postgresql postgresql-contrib

$ sudo -u postgres psql

# In the Gitea Box

postgres=# CREATE ROLE <gitea-username> WITH LOGIN PASSWORD '<gitea-password>';

postgres=# CREATE DATABASE <gitea-database-name> WITH OWNER <gitea-username> TEMPLATE template0 ENCODING UTF8 LC_COLLATE 'en_US.UTF-8' LC_CTYPE 'en_US.UTF-8';

# In the Woodpecker Box

postgres=# CREATE ROLE <woodpecker-username> WITH LOGIN PASSWORD '<woodpecker-password>';

postgres=# CREATE DATABASE <woodpecker-database-name> WITH OWNER <woodpecker-username> TEMPLATE template0 ENCODING UTF8 LC_COLLATE 'en_US.UTF-8' LC_CTYPE 'en_US.UTF-8';

# In both boxes

postgres=# exit;

Add the following lines to your pg_hba.conf

# In both boxes

$ sudo -u postgres psql

postgres=# SHOW hba_file;

hba_file

-------------------------------------

/etc/postgresql/14/main/pg_hba.conf

(1 row)

postgres=# exit;

# In Gitea Box

$ sudo nano -c /etc/postgresql/14/main/pg_hba.conf

# Database administrative login by Unix domain socket

local <gitea-database-name> <gitea-username> scram-sha-256

# In Woodpecker Box

$ sudo nano -c /etc/postgresql/14/main/pg_hba.conf

# Database administrative login by Unix domain socket

local <woodpecker-database-name> <woodpecker-username> scram-sha-256

(9). Ssh into the Gitea box. Follow this guide to set up gitea as a systemd service

$ sudo wget https://raw.githubusercontent.com/go-gitea/gitea/main/contrib/systemd/gitea.service -P /etc/systemd/system/

$ sudo nano -c /etc/systemd/system/gitea.service # Uncomment all the postgresql stuff

(10). Start the gitea service with the command sudo systemctl start gitea. Do not worry if you get a failure message like this

Job for gitea.service failed because a timeout was exceeded.

See "systemctl status gitea.service" and "journalctl -xeu gitea.service" for details.

(11). The Gitea server runs on port 3000. Open http://localhost:3000 to configure database settings and create your first admin user for Gitea.

(12). Create the nginx config for the Gitea service.

$ sudo nano -c /etc/nginx/sites-available/gitea.conf

server {

listen 80;

server_name git.YOUR_DOMAIN;

location / {

proxy_pass http://localhost:3000;

proxy_http_version 1.1;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Connection "";

chunked_transfer_encoding off;

proxy_buffering off;

}

}

(13). Edit the /etc/gitea/app.ini to resemble the below:

$ sudo nano -c /etc/gitea/app.ini

....

[server]

SSH_DOMAIN = git.YOUR_DOMAIN

DOMAIN = git.YOUR_DOMAIN

HTTP_PORT = 3000

ROOT_URL = http://git.YOUR_DOMAIN/

---

(14). Enable the domain and restart the box.

$ sudo ln -s /etc/nginx/sites-available/gitea.conf /etc/nginx/sites-enabled/

$ sudo reboot

(15). Relogin and restart the gitea service.

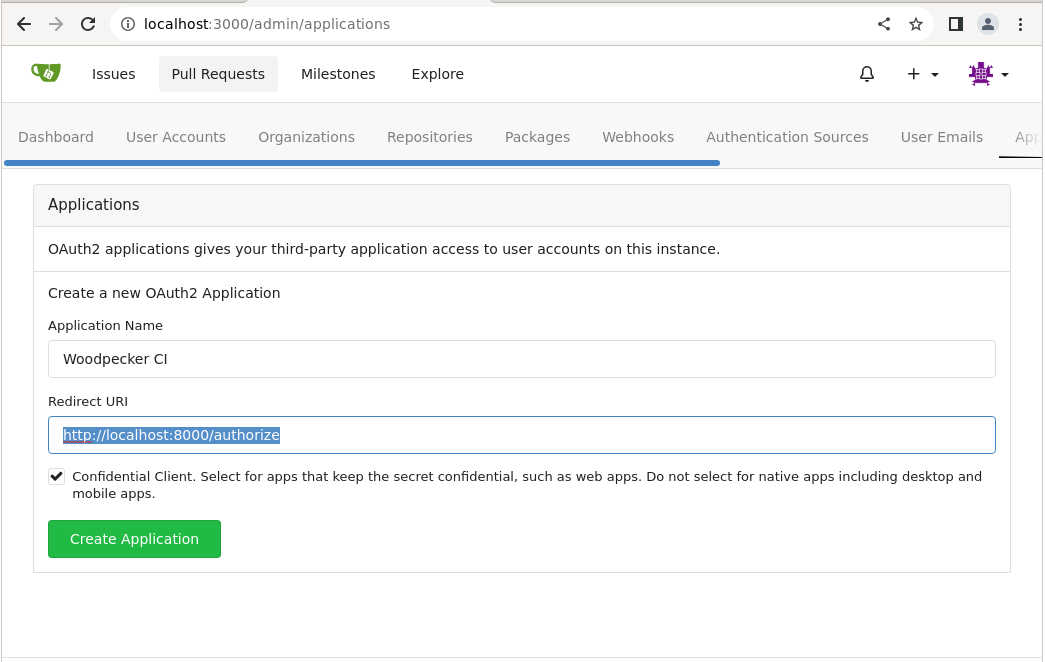

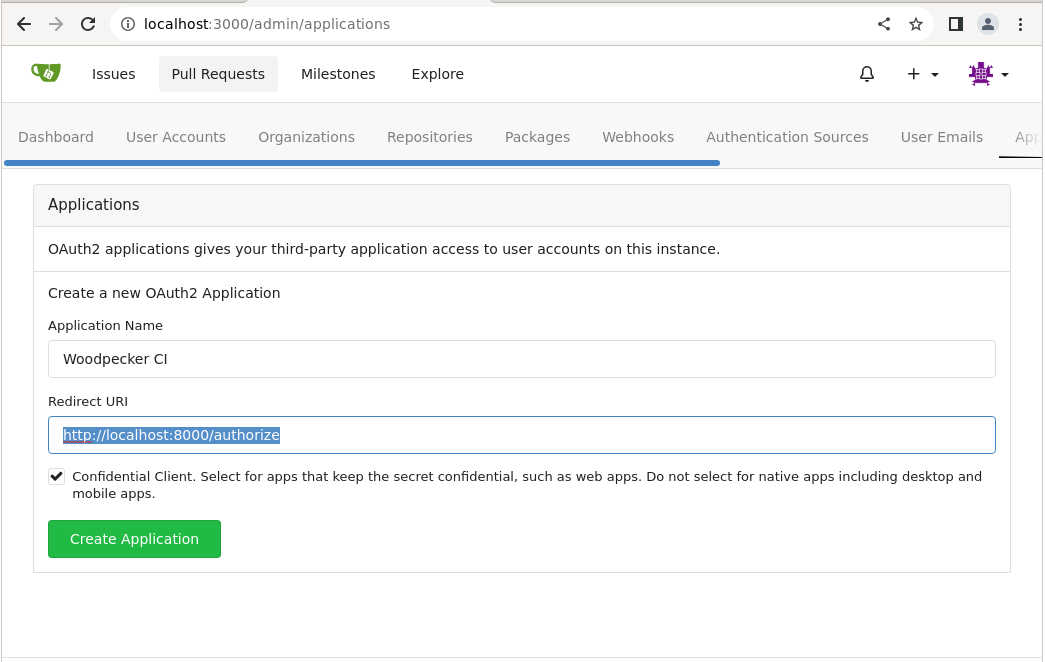

(16). Navigate to http://git.YOUR_DOMAIN/admin/applications to configure the OAuth application as shown below:

Replace the highlighted line in the picture above with http://build.YOUR_DOMAIN/authorize. Save the Client Id and Client Secret.

(17). Ssh into the Woodpecker box. Create the nginx config for the Woodpecker CI service.

$ sudo nano -c /etc/nginx/sites-available/woodpecker.conf

server {

listen 80;

server_name build.YOUR_DOMAIN;

location / {

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_pass http://127.0.0.1:8000;

proxy_redirect off;

proxy_http_version 1.1;

proxy_set_header Connection "";

proxy_buffering off;

chunked_transfer_encoding off;

}

}

(18). Enable the domain and restart the box.

$ sudo ln -s /etc/nginx/sites-available/woodpecker.conf /etc/nginx/sites-enabled/

$ sudo reboot

(19). Ssh into Woodpecker Box. Open a file /etc/systemd/system/woodpecker.service and paste the following:

$ sudo nano -c /etc/systemd/system/woodpecker.service

[Unit]

Description=Woodpecker

Documentation=https://woodpecker-ci.org/docs/intro

Requires=network-online.target

After=network-online.target

[Service]

User=woodpecker

Group=woodpecker

EnvironmentFile=/etc/woodpecker.conf

ExecStart=/usr/local/bin/woodpecker-server

RestartSec=5

Restart=on-failure

SyslogIdentifier=woodpecker-server

WorkingDirectory=/var/lib/woodpecker

[Install]

WantedBy=multi-user.target

(20). Open the file /etc/woodpecker.conf and paste the following:

WOODPECKER_OPEN=true

WOODPECKER_HOST=http://build.YOUR_DOMAIN

WOODPECKER_GITEA=true

WOODPECKER_GITEA_URL=http://git.YOUR_DOMAIN

WOODPECKER_GITEA_CLIENT=<Client ID from 16>

WOODPECKER_GITEA_SECRET=<Client Secret from 16>

WOODPECKER_GITEA_SKIP_VERIFY=true

WOODPECKER_DATABASE_DRIVER=postgres

WOODPECKER_LOG_LEVEL=info

WOODPECKER_DATABASE_DATASOURCE=postgres://<woodpecker-username>:<woodpecker-password>@127.0.0.1:5432/<woodpecker-database-name>?sslmode=disable

GODEBUG=netdns=go

(21). Start the woodpecker service and navigate to http://build.YOUR_DOMAIN. Click the login button. It will take you to a page where you can authorize woodpecker to receive webhooks.

(22). Stop the woodpecker service

$ sudo systemctl stop woodpecker

(23). Generate a long random string to be used as a secret for the woodpecker-agent to communicate with the woodpecker-server with this command
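One way to generate such a secret, offered as an assumption rather than the command originally used, is to read random bytes from /dev/urandom and hex-encode them. The function name generate_agent_secret is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print 32 random bytes as a 64-character hex string.
generate_agent_secret() {
    # od prints the bytes as space-separated hex pairs; tr strips spaces and newlines.
    head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

# Usage (prints a different value on every call):
#   generate_agent_secret
```

The same value then has to be used for WOODPECKER_AGENT_SECRET on both the server and the agent.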

(24). Add that secret from step (23) to the /etc/woodpecker.conf as follows:

...

WOODPECKER_AGENT_SECRET=GENERATED_SECRET

(25). Open file /etc/systemd/system/woodpecker-agent.service and add this

$ sudo nano -c /etc/systemd/system/woodpecker-agent.service

[Unit]

Description=Woodpecker

Documentation=https://woodpecker-ci.org/docs/intro

Requires=network-online.target

After=network-online.target

[Service]

User=woodpecker

Group=woodpecker

EnvironmentFile=/etc/woodpecker-agent.conf

ExecStart=/usr/local/bin/woodpecker-agent

RestartSec=5

Restart=on-failure

SyslogIdentifier=woodpecker-agent

WorkingDirectory=/var/lib/woodpecker

[Install]

WantedBy=multi-user.target

(26). Open file /etc/woodpecker-agent.conf and add the same secret from step (23).

WOODPECKER_SERVER=localhost:9000

WOODPECKER_BACKEND=docker

WOODPECKER_AGENT_SECRET=GENERATED_SECRET

(27). Restart woodpecker-server

$ sudo systemctl start woodpecker

(28). Start woodpecker-agent with

$ sudo systemctl start woodpecker-agent

(29). Reboot the two boxes.

(30). To add https, install certbot and select all default choices

# In both boxes

$ sudo apt-get install certbot python3-certbot-nginx

$ sudo certbot --nginx

$ sudo systemctl restart nginx

(31). Modify the /etc/gitea/app.ini to add https

$ sudo nano -c /etc/gitea/app.ini

....

[server]

SSH_DOMAIN = git.YOUR_DOMAIN

DOMAIN = git.YOUR_DOMAIN

HTTP_PORT = 3000

ROOT_URL = https://git.YOUR_DOMAIN/

(32). Modify the /etc/woodpecker.conf to add the https urls

WOODPECKER_HOST=https://build.YOUR_DOMAIN

...

WOODPECKER_GITEA_URL=https://git.YOUR_DOMAIN

...

(33). Enable all the services to start on boot

# In the Gitea box

$ sudo systemctl enable gitea

# In the Woodpecker box

$ sudo systemctl enable woodpecker

$ sudo systemctl enable woodpecker-agent

TESTING INSTRUCTIONS

For testing this setup, follow these instructions:

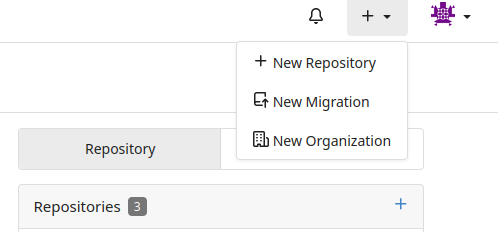

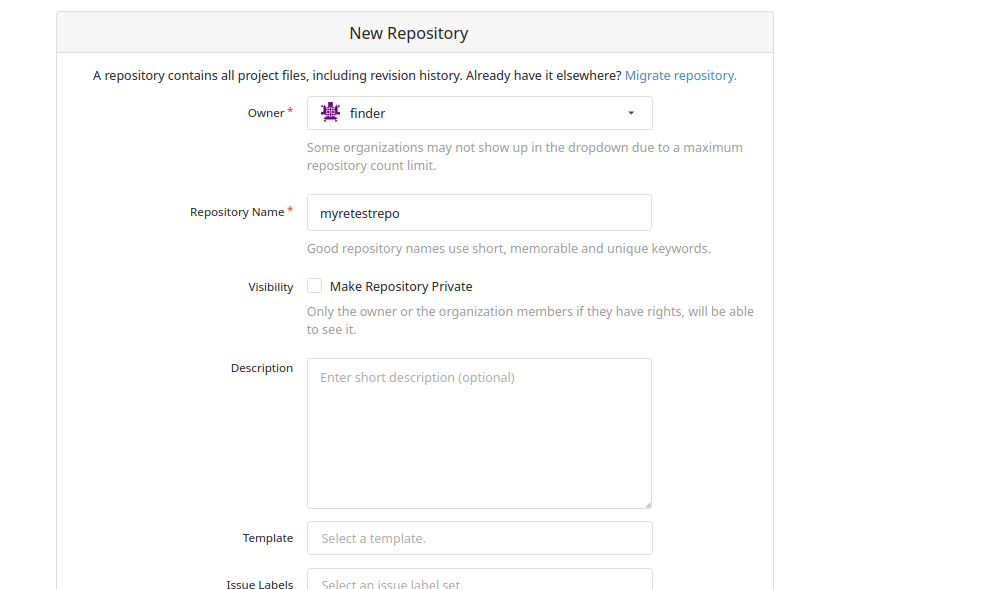

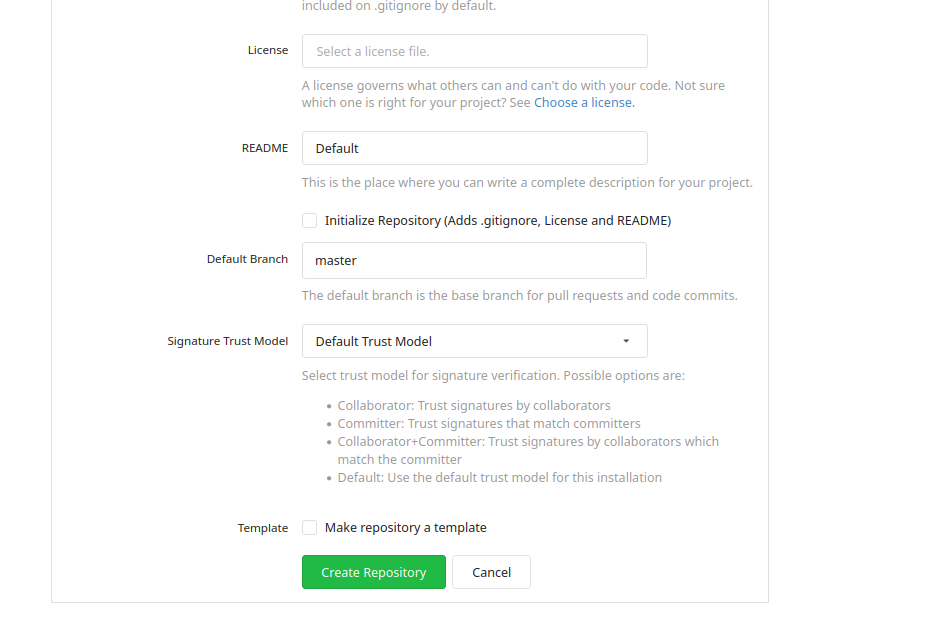

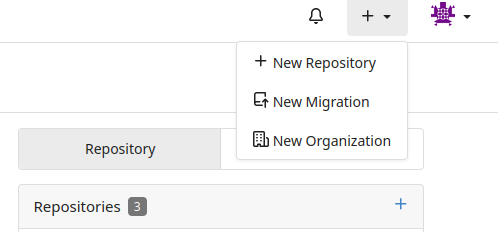

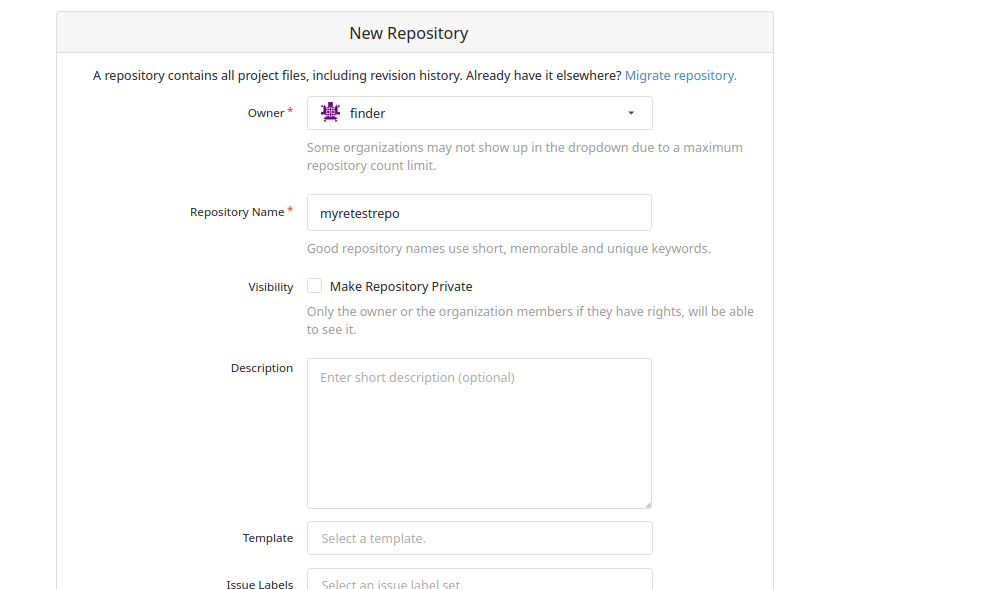

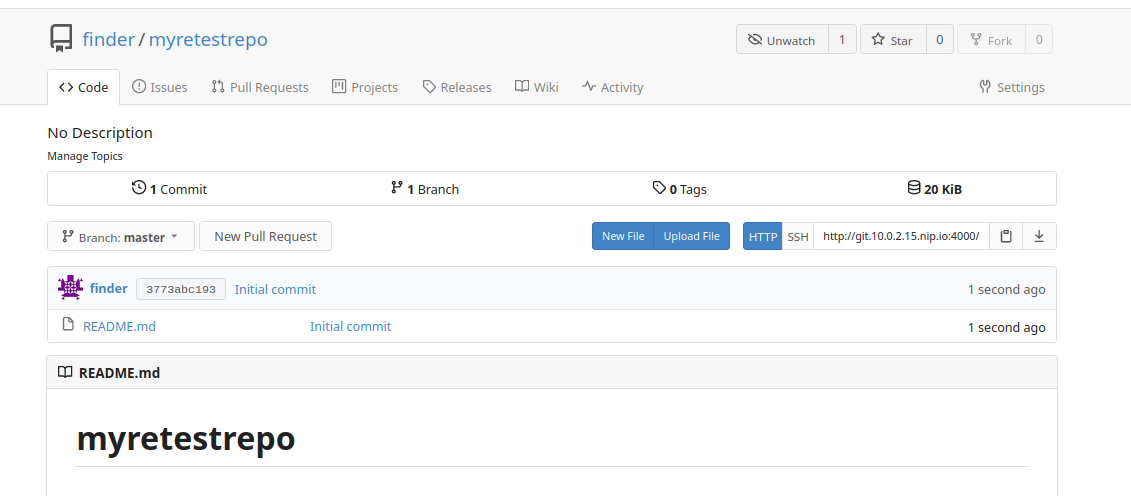

(a) Go to the Gitea UI. Select "+". There should be a dropdown with some options. Select "New Repository"

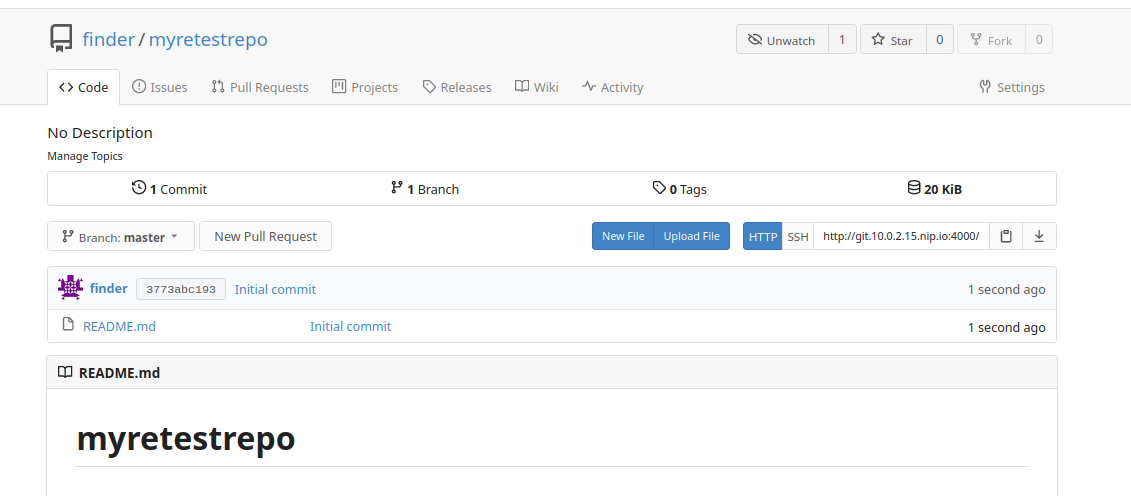

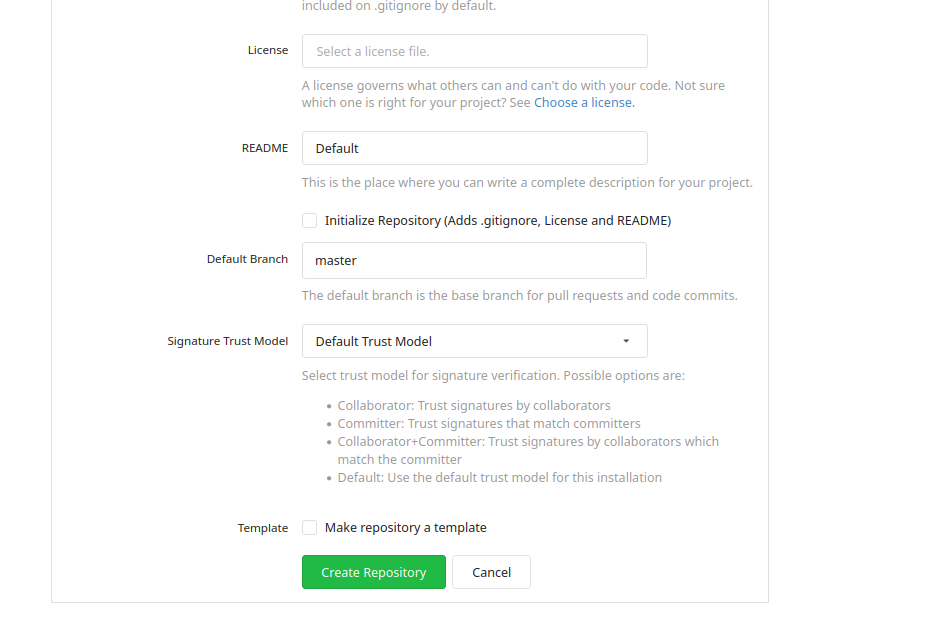

(b) Fill out the form and select the checkbox "Initialize Repository". Click the button "Create Repository".

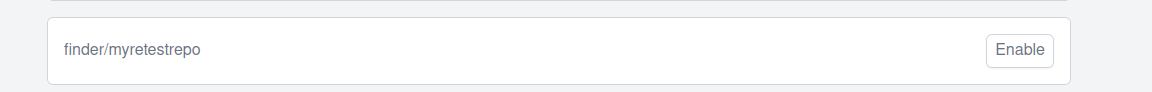

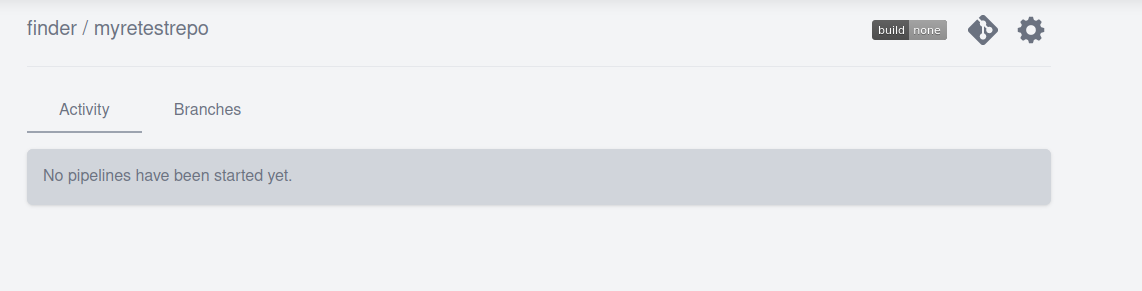

(c) Go back to the Woodpecker CI UI. And click "Add repository".

(d) Then click "Reload Repositories" and your repository should appear.

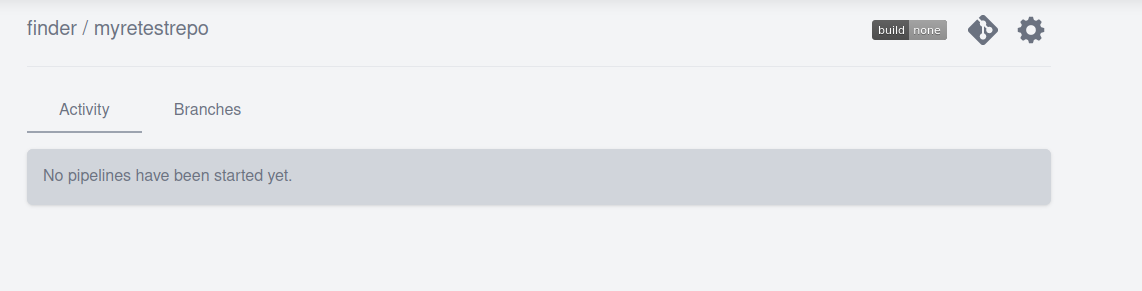

(e) Then click "Enable".

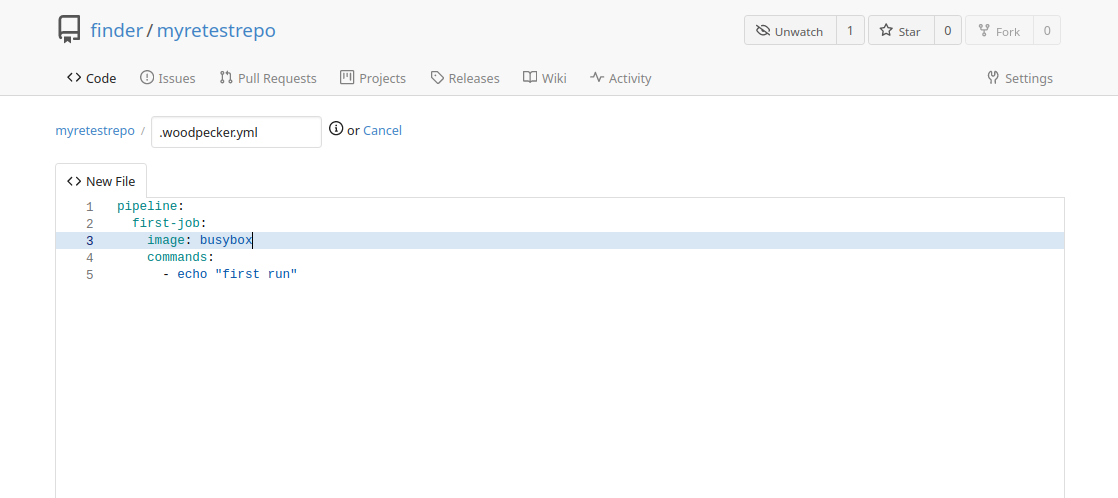

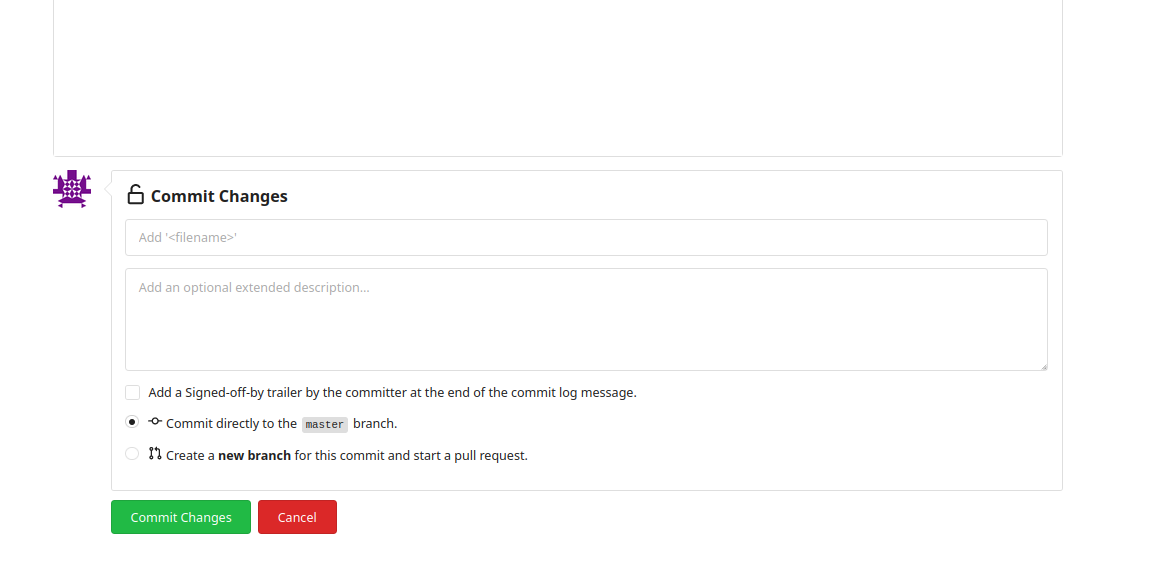

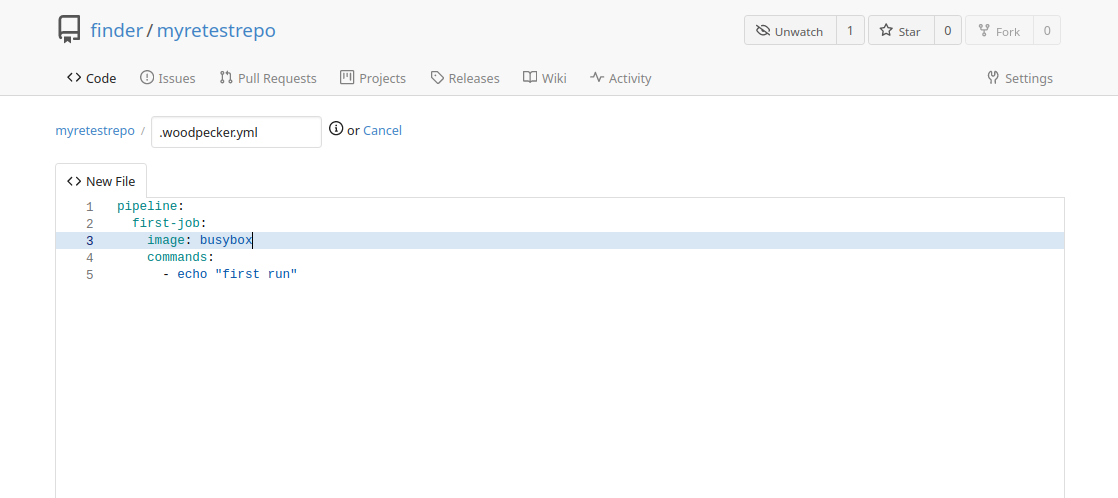

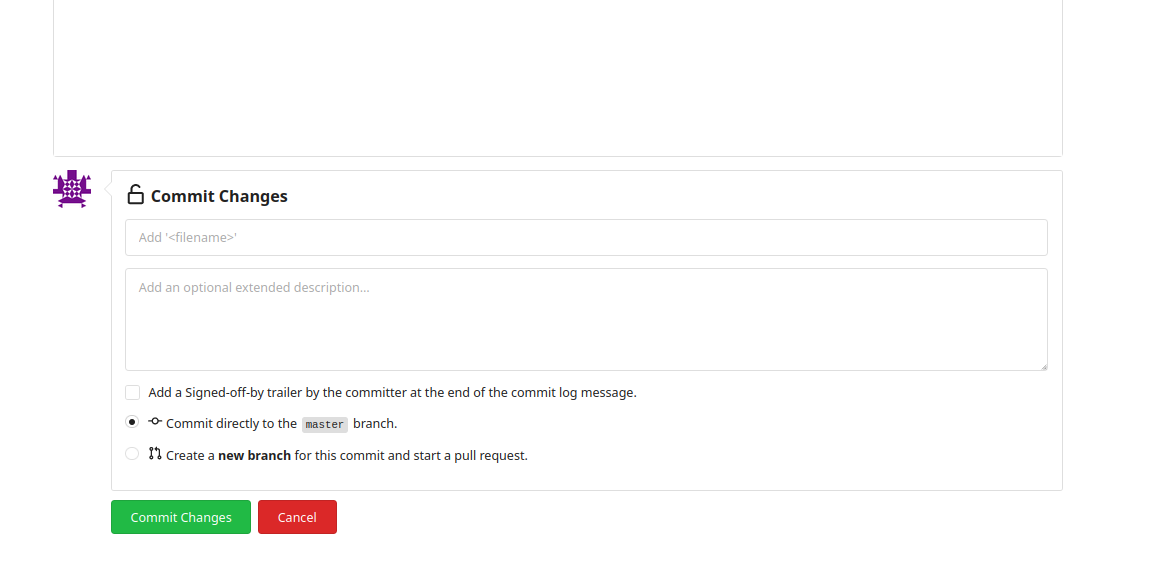

(f) Head back to the Gitea UI in (b) and click "New File". Add a sample pipeline.yml from this link

pipeline:

run-one:

image: busybox

group: first

commands:

- echo "first run"

run-two:

image: busybox

group: first

commands:

- echo "second run"

run-three:

image: ubuntu

commands:

- echo hi

when:

branch:

include: [ master, release/* ]

exclude: [ test/1.0.0, test/1.1.* ]

(g) Commit the file to the master branch. Head to the Woodpecker CI UI and you should see that your build should have completed.

OBSERVATIONS

(a) I had initially wanted to run both of them, the Gitea instance and Woodpecker CI, on the same host. I tried running both of them locally, testing with localhost, but I never got past step 21 of the installation guide. I kept getting errors like the one below, which is also referenced here

{"level":"error","time":"2022-12-xxxx","message":"cannot authenticate user. Post \"http://localhost.lan/login/oauth/access_token\": dial tcp 127.0.0.1:80: connect: connection refused"}

This error magically did not show up when running in docker. Since I was not running in docker for my production setup, I had to run both on their own host

(b) So when you go to the Gitea UI from the installation steps above, you would see this:

So based on this, anyone can register for an account at your service. The side effect is that any new account has automatic admin privileges upon creation, which is described here. If you want to disable signups, you would set this attribute in the /etc/gitea/app.ini according to this link

...

[service]

...

DISABLE_REGISTRATION = true

...

Sep 22, 2021

At work, I started playing with Azure. It is so different to AWS. More on that in subsequent posts.

We are supposed to set up a CI/CD pipeline with Jenkins as the build tool instead of Azure. The reason for that is to deploy Azure functions, the equivalent to AWS Lambda.

I am not familiar with Jenkins, so I decided to set up Jenkins on DigitalOcean. The reason is that DigitalOcean is my preferred cloud provider for any hands-on devops work.

The steps assume that you have already followed these instructions and these instructions. The steps that I took are as follows:

-

Upgrade everything. For me, that means running a script. The script, aptly named upgrade.sh is shown below.

#!/bin/bash

echo "Upgrading"

sudo DEBIAN_FRONTEND=noninteractive apt-get -yq update

sudo DEBIAN_FRONTEND=noninteractive apt-get -yq dist-upgrade

echo "Cleaning up"

sudo apt-get -yf install &&

sudo apt-get -y autoremove &&

sudo apt-get -y autoclean &&

sudo apt-get -y clean

-

Install jenkins key

wget -q -O - https://pkg.jenkins.io/debian-stable/jenkins.io.key | sudo apt-key add -

-

Install the apt repository

sudo sh -c 'echo deb http://pkg.jenkins.io/debian-stable binary/ > /etc/apt/sources.list.d/jenkins.list'

-

Run (1)

-

Install jre and jdk. They have to be installed before installing jenkins because jenkins depends on them to run.

sudo apt install default-jre

sudo apt install default-jdk

-

Install jenkins.

sudo apt install jenkins

-

Start the jenkins server and allow jenkins into the firewall(ufw). The default port for jenkins is port 8080.

sudo systemctl start jenkins

sudo systemctl status jenkins

sudo ufw allow 8080

sudo ufw status

-

Go to http://localhost:8080 in the browser. Follow the instructions in the Setup wizard, Plugins and Admin user.

The url for the instance can be found here

grep jenkinsUrl /var/lib/jenkins/*.xml

So Jenkins is set up. But it is on an http endpoint, instead of https. I wanted to set up the jenkins instance on the same droplet that hosts this website and set it up with an https endpoint. To do this, I followed the steps below:

- Set up a different sub-domain by following these instructions. Apparently I thought I needed to add a CNAME record. That is not needed.

-

Create a new domain file.

sudo cp /etc/nginx/sites-available/default /etc/nginx/sites-available/example.com

-

Modify the domain file to look like this

server {

access_log /var/log/nginx/jenkins.access.log;

error_log /var/log/nginx/jenkins.error.log;

location / {

include /etc/nginx/proxy_params;

proxy_pass http://localhost:8080;

proxy_read_timeout 90s;

proxy_redirect http://localhost:8080 https://example.com;

}

}

-

Run the config test.

sudo nginx -t

-

Link the domain file in sites-available to sites-enabled. It needs to be done to allow nginx to load your config

sudo ln -s /etc/nginx/sites-available/example.com /etc/nginx/sites-enabled

-

Modify the JENKINS_ARGS in /etc/default/jenkins file to look like below:

JENKINS_ARGS="--webroot=/var/cache/$NAME/war --httpPort=$HTTP_PORT --httpListenAddress=127.0.0.1"

-

Modify the url for the jenkins instance by modifying the jenkinsUrl in the file /var/lib/jenkins/jenkins.model.JenkinsLocationConfiguration.xml to look like this:

<jenkinsUrl>http://127.0.0.1:8080/</jenkinsUrl>

-

Run the certbot program and enter 1 when prompted.

sudo certbot --nginx -d example.com