Deploying the blog

OUTLINE

- Introduction

- Pushing repo to the gitea instance

- A (not-so) brief explainer about ssh

- Writing the pipeline.yml

- Observations

- Enhancements

INTRODUCTION

This blog is built with Pelican, a Python static site generator with no bells and whistles. I normally deploy new blog entries as follows:

1. add the new blog entries to git

2. push the commit from (1) to remote

3. ssh into the box where the blog is

4. pull down the commit from (2)

5. regenerate the blog html

6. copy over the blog html from (5) to the location where the web server (Nginx) serves the blog.
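
The manual steps above can be sketched as a small shell script. This is only an illustration: the host name, directory names, and commit message are hypothetical placeholders, and with DRY_RUN=1 (the default here) the script prints each command instead of running it.

```shell
#!/bin/sh
# Sketch of the manual deploy; every name below is a hypothetical placeholder.
BLOG_HOST="user@blog.example.com"   # assumption: SSH login for the blog box
BLOG_DIR="blog"                     # assumption: repo checkout on the remote box
WEB_ROOT="/var/www/example.com"     # assumption: directory Nginx serves from
DRY_RUN="${DRY_RUN:-1}"             # 1 = print the commands, 0 = run them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

deploy() {
    run git add content                       # add the new entries
    run git commit -m "Add new blog entry"    # record them in a commit
    run git push origin main                  # push the commit to the remote
    # log in, pull, regenerate the html, copy it to the web root
    run ssh "$BLOG_HOST" \
        "cd $BLOG_DIR && git pull && make publish && sudo cp -r output/. $WEB_ROOT/"
}

deploy_log=$(deploy)
printf '%s\n' "$deploy_log"
```

Running it as is only lists the four commands; setting DRY_RUN=0 would execute them, which only makes sense once the placeholders match a real setup.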

I wanted to automate this using my new Gitea and Woodpecker CI instances. First, I had to push the code to the Gitea instance. Second, I had to write a pipeline that could build the code and run the steps outlined above.

PUSHING REPO TO THE GITEA INSTANCE

1. Created an empty repo with the same name (iratusmachina) in the Gitea UI.

2. Pulled down the empty repo (remote) to my local machine:

$ git clone https://git.iratusmachina.com/iratusmachina/iratusmachina.git

3. Added the new remote:

$ git remote add gitea <remote-url-from-(1)>

4. Pushed to the new remote:

$ git push -u gitea <main-branch>

A (NOT-SO) BRIEF EXPLAINER ABOUT SSH

Deploying the blog has increased my knowledge of SSH. Much more than what I cover below is described here.

To communicate securely with a shell on a remote box, one would use SSH. Once connected, the client sends commands over SSH for the remote shell to execute on the remote host.

SSH is based on the client-server model: the server program runs on the remote host and the client runs on the local host (the user's system). The server listens for connections on a specific network port, authenticates connection requests, and spawns the appropriate environment if the client on the user's system provides the correct credentials.

Authentication generally uses public-key (asymmetric) cryptography, where there is a public and a private key. What is encrypted with one of the keys can only be decrypted with the other key. In SSH, the private and public keys reside on the client, while a copy of the public key resides on the server.

To generate the public/private key pair, one would run the command

$ ssh-keygen -t ed25519 -C "your_email@example.com" # Here you can use a passphrase to further secure the keys

...

Output

Your identification has been saved in /root/.ssh/id_ed25519.

Your public key has been saved in /root/.ssh/id_ed25519.pub.

...

Then the user can copy the public key to the server manually, that is, append it to the file ~/.ssh/authorized_keys, if the user is already logged into the server. SSH respects this file only if it is not writable by anyone apart from the owner and root (600 or rw-------). If the user is not logged into the remote server, this command can be used instead:

$ ssh-copy-id username@remote_host # ssh-copy-id has to be installed on the local machine

OR

$ cat ~/.ssh/id_ed25519.pub | ssh username@remote_host "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys" # use if ssh-copy-id is not installed locally
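
The permission requirement mentioned above can be tried out safely with a short sketch. It works in a throwaway directory that stands in for the remote user's home, and the key line is a placeholder rather than a real key, so nothing under the real ~/.ssh is touched.

```shell
#!/bin/sh
# Demonstrates the expected permissions in a temporary directory that stands in
# for the remote user's home; the key text is a placeholder, not a real key.
demo_home=$(mktemp -d)

mkdir -p "$demo_home/.ssh"
chmod 700 "$demo_home/.ssh"                     # only the owner may enter
printf '%s\n' "ssh-ed25519 AAAA...placeholder... your_email@example.com" \
    >> "$demo_home/.ssh/authorized_keys"
chmod 600 "$demo_home/.ssh/authorized_keys"     # owner read/write only

# Read the permission strings back (first 10 characters of `ls -ld`).
ssh_dir_mode=$(ls -ld "$demo_home/.ssh" | cut -c1-10)
key_file_mode=$(ls -ld "$demo_home/.ssh/authorized_keys" | cut -c1-10)
printf '%s %s\n' "$ssh_dir_mode" ".ssh"
printf '%s %s\n' "$key_file_mode" "authorized_keys"

rm -rf "$demo_home"
```

On the real server the same two chmod calls would be applied to ~/.ssh and ~/.ssh/authorized_keys.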

Then when you log in, the following sequence of events takes place.

1. The SSH client on the local (user's computer) requests SSH-key-based authentication from the server program on the remote host.

2. The server program on the remote host looks up the user's public SSH key in the whitelist file ~/.ssh/authorized_keys.

3. The server program on the remote host then creates a challenge (a random string).

4. The server program on the remote host encrypts the challenge from (3) using the public key retrieved in (2). This encrypted challenge can only be decrypted with the associated private key.

5. The server program on the remote host sends this encrypted challenge to the client on the local (user's computer) to test whether it actually has the associated private key.

6. The client on the local (user's computer) decrypts the encrypted challenge from (5) with the user's private key.

7. The client on the local (user's computer) prepares a response, encrypts it with the user's private key, and sends it to the server program on the remote host.

8. The server program on the remote host receives the encrypted response and decrypts it with the public key from (2). If the response matches the challenge, this proves that the client on the local (user's computer) that tried to connect in (1) has the correct private key.

If the private SSH key has a passphrase (to improve security), a prompt to enter the passphrase appears every time the private SSH key is used to connect to a remote host.

An SSH agent helps to avoid having to do this repeatedly. This small utility stores the private key after the passphrase has been entered for the first time. The key stays available for the duration of the user's terminal session, allowing the user to connect in the future without re-entering the passphrase. This is also important if the SSH credentials need to be forwarded. The SSH agent never hands these keys to client programs; it merely presents a socket over which clients can send it data and over which it responds with signed data. A side benefit of this is that the user can use their private key even with programs that the user doesn't fully trust.

To start the SSH agent, run this command:

$ eval $(ssh-agent)

Agent pid <number>

Why do we need to use eval instead of just ssh-agent? SSH needs two things in order to use ssh-agent: an ssh-agent instance running in the background, and an environment variable (SSH_AUTH_SOCK) that holds the path of the Unix socket the agent uses to communicate with other processes. Running ssh-agent prints the shell commands that set this variable, and eval executes them in the current shell. If you just run ssh-agent, the agent will start, but SSH will have no idea where to find it.
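
The difference can be seen without starting a real agent. In the sketch below, fake_agent is a hypothetical stand-in that only mimics the shape of the text ssh-agent prints; the socket path and pid are made up.

```shell
#!/bin/sh
# fake_agent is a hypothetical stand-in that only mimics the shape of what
# ssh-agent prints; it does not start a real agent.
fake_agent() {
    echo "SSH_AUTH_SOCK=/tmp/ssh-demo/agent.1234; export SSH_AUTH_SOCK;"
    echo "SSH_AGENT_PID=1235; export SSH_AGENT_PID;"
    echo "echo Agent pid 1235;"
}

unset SSH_AUTH_SOCK SSH_AGENT_PID

# Without eval: the text is only printed (discarded here), nothing is set.
fake_agent > /dev/null
without_eval="${SSH_AUTH_SOCK:-unset}"

# With eval: the printed text is executed in the current shell.
eval "$(fake_agent)"
with_eval="${SSH_AUTH_SOCK:-unset}"

echo "without eval: $without_eval"
echo "with eval:    $with_eval"
```

Only the second call leaves SSH_AUTH_SOCK set in the calling shell, which is what later ssh and ssh-add invocations rely on.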

After that, the private key can be added to the ssh-agent, so that it can manage the key, using this command:

$ ssh-add ~/.ssh/id_ed25519

After this, the user can verify that a connection can be made to the remote/server using this command (if the user has not done so before):

$ ssh username@remote_host

...

The authenticity of host '<remote_host> (<remote_host_ip>)' can't be established.

ECDSA key fingerprint is SHA256:OzvpQxRUzSfV9F/ECMXbQ7B7zbK0aTngrhFCBUno65c.

Are you sure you want to continue connecting (yes/no)?

If the user types yes, the SSH client writes the host public key to the known_hosts file ~/.ssh/known_hosts and won’t prompt the user again on subsequent SSH connections to the remote_host. If the user doesn’t type yes, the connection to the remote_host is prevented. This interactive method allows for server verification to prevent man-in-the-middle attacks.

During automation (CI), user interaction is essentially unavailable, so this prompt cannot be answered. Another way of making the host known is to use the ssh-keyscan utility as shown below:

$ ssh-keyscan -H <remote_host> >> ~/.ssh/known_hosts

The command above appends all the public host keys of <remote_host> to the known_hosts file; the -H flag stores the hostnames in hashed form. Essentially, ssh-keyscan automates retrieving the public keys of the remote_host for inclusion in the known_hosts file without actually logging into the server.

So to run commands on the remote host through ssh, the user can run this command:

$ ssh -o StrictHostKeyChecking=no -i ~/.ssh/id_ed25519 <remote-user>@<remote-host-ip> "<command>"

StrictHostKeyChecking=no tells the SSH client on the local (user's computer) to trust the remote host's key without prompting and continue connecting. The -i option is used because we are not using the ssh-agent to manage the private key, so we have to tell the SSH client on the local (user's computer) where our private key is located.
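
Since the known_hosts file can be filled beforehand with ssh-keyscan, one alternative worth considering is to leave host key checking on and point SSH at that file. The following is a minimal sketch under that assumption; the user, host, and paths are hypothetical placeholders, and the script only assembles and prints the command rather than connecting.

```shell
#!/bin/sh
# All values are hypothetical placeholders.
REMOTE_USER="deploy"
REMOTE_HOST="blog.example.com"
KEY_FILE="$HOME/.ssh/id_ed25519"
KNOWN_HOSTS_FILE="$HOME/.ssh/known_hosts"

# Assemble (but do not run) an ssh command that refuses unknown host keys.
build_ssh_command() {
    printf 'ssh -o StrictHostKeyChecking=yes -o UserKnownHostsFile=%s -i %s %s@%s "%s"\n' \
        "$KNOWN_HOSTS_FILE" "$KEY_FILE" "$REMOTE_USER" "$REMOTE_HOST" "$1"
}

ssh_command=$(build_ssh_command "uptime")
echo "$ssh_command"
```

With StrictHostKeyChecking=yes the client refuses to connect if the host key is missing from, or differs from, the entry in the given known_hosts file.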

WRITING THE PIPELINE YML

I followed the instructions here. The general pipeline syntax for 0.15.x is:

pipeline:
  <step-name-1>:
    image: <image-name of container where the commands will be run>
    commands:
      - <First-command>
      - <Second-command>
    secrets: [ <secret-1>, ... ]
    when:
      event: [ <events from push, pull_request, tag, deployment, cron, manual> ]
      branch: <your branch>
  <step-name-2>:
    ...

The commands are run as a shell script, with this command-line approximation:

$ docker run --entrypoint=build.sh <image-name of container where the commands will be run>

The when section is a set of conditions and is normally attached to a stage or to a pipeline. If the when section is attached to a stage and all of its conditions are satisfied, the commands belonging to that stage are run. The same applies to a pipeline.
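
As a rough mental model, a when section with an event list and a branch behaves like the check below: the event must be one of the listed events and the branch must match as well. The helper step_should_run is hypothetical and not part of Woodpecker; it only mimics a when section with event [ push ] and branch main.

```shell
#!/bin/sh
# step_should_run is a hypothetical helper, not part of Woodpecker. It mimics
#   when: event: [ push ], branch: main
# Usage: step_should_run <event> <branch>
step_should_run() {
    case "$1" in
        push) ;;            # event is in the allowed list
        *) return 1 ;;      # any other event skips the stage
    esac
    [ "$2" = "main" ]       # the branch must match as well
}

for trigger in "push main" "push feature" "pull_request main"; do
    set -- $trigger         # split into event ($1) and branch ($2)
    if step_should_run "$1" "$2"; then
        echo "$trigger -> run"
    else
        echo "$trigger -> skip"
    fi
done
```

Only the push to main passes both conditions; the other two triggers are skipped.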

The final pipeline is shown below:

pipeline:
  build:
    image: python:3.11-slim
    commands:
      - apt-get update
      - apt-get install -y --no-install-recommends build-essential gcc make rsync openssh-client && apt-mark auto build-essential gcc make rsync openssh-client
      - command -v ssh
      - mkdir -p ~/.ssh
      - chmod 700 ~/.ssh
      - echo "$${BLOG_HOST_SSH_PRIVATE_KEY}" > ~/.ssh/bloghost
      - echo "$${BLOG_HOST_SSH_PUBLIC_KEY}" > ~/.ssh/bloghost.pub
      - chmod 600 ~/.ssh/bloghost
      - chmod 600 ~/.ssh/bloghost.pub
      - ssh-keyscan -H $${BLOG_HOST_IP_ADDR} >> ~/.ssh/known_hosts
      - ls -lh ~/.ssh
      - cat ~/.ssh/known_hosts
      - python -m venv /opt/venv
      - export PATH="/opt/venv/bin:$${PATH}"
      - export PYTHONDONTWRITEBYTECODE="1"
      - export PYTHONUNBUFFERED="1"
      - echo $PATH
      - ls -lh
      - pip install --no-cache-dir -r requirements.txt
      - make publish
      - ls output
      - rsync output/ -Pav -e "ssh -o StrictHostKeyChecking=no -i ~/.ssh/bloghost" $${BLOG_HOST_SSH_USER}@$${BLOG_HOST_IP_ADDR}:/home/$${BLOG_HOST_SSH_USER}/contoutput
      - ssh -o StrictHostKeyChecking=no -i ~/.ssh/bloghost $${BLOG_HOST_SSH_USER}@$${BLOG_HOST_IP_ADDR} "cd ~; sudo cp -r contoutput/. /var/www/iratusmachina.com/"
      - rm -rf ~/.ssh
    secrets: [ blog_host_ssh_private_key, blog_host_ip_addr, blog_host_ssh_user, blog_host_ssh_public_key ]
    when:
      event: [ push ]
      branch: main

OBSERVATIONS

-

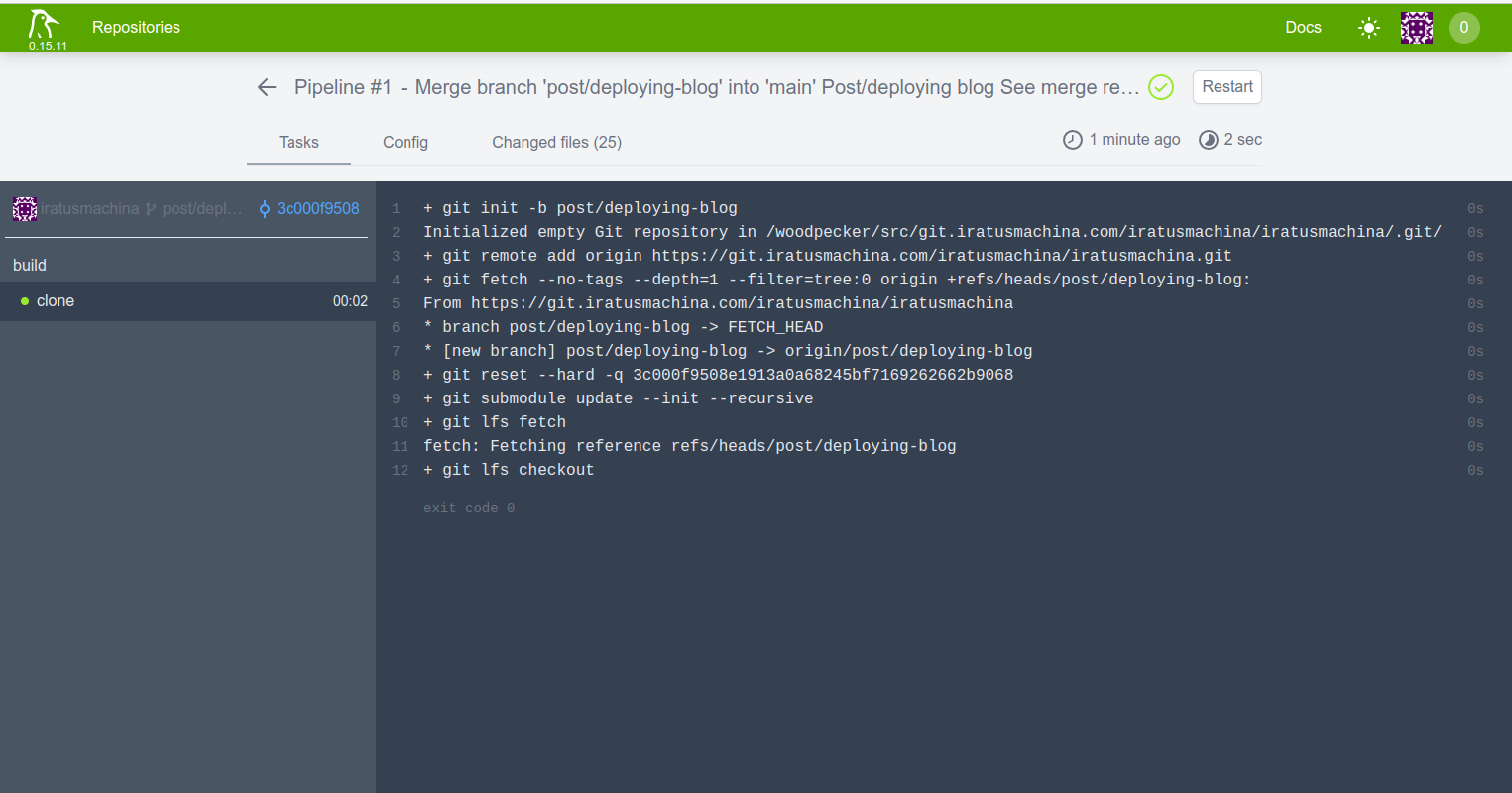

The first thing Woodpecker runs before any stage in a pipeline is the

clonestep. Thisclonestep pulls down the code from the forge (in this case, the Gitea Instance). That is, it pulls down the branch from the forge, that trigged the webhook that is registered at the Gitea Instance from Woodpecker theclonestep on evey push to remote. This branch is pulled into the volume/workspace for the commands to work on. This is shown below:

- By attaching this when condition

      when:
        event: [ push ]
        branch: main

  to a stage, the commands in the stage get executed on a push to the branch called main. I also had the event pull_request as part of the event list, but I found out that this means the stage also runs on the creation of a PR into main, which is not what I want. If I had some tests, the pull_request event would come in handy.

- I tried to split the commands into different stages, but it does not work, as shown here:

  "Changes to files are persisted through steps as the same volume is mounted to all steps"

  I think it means that if you installed something in a previous stage in a pipeline, the command you installed would not be available in the next stage in that pipeline, since each stage runs in its own container and only the workspace volume is shared. The "Changes to files" refer to the files created/modified/deleted directly by commands in the stage.

- A global when does not seem to work for Woodpecker v0.15. A global when is something like this (taken from the previous example):

      when:
        event: [ <events from push, pull_request, tag, deployment, cron, manual> ]
        branch: <your branch>

      pipeline:
        <step-name-1>:
          image: <image-name of container where the commands will be run>
          commands:
            - <First-command>
            - <Second-command>
          secrets: [ <secret-1>, ... ]
        <step-name-2>:
          ...

  So a global when applies to all the stages in the pipeline. In my case, it appears the when condition applied only if associated with a stage.

- Initially I tried to run the pipeline defined above with a passphrase-protected key pair, but I ran into connection issues. Using a passphrase-less key pair solved my connection issues.

- I tried to pass my login user's password over ssh to run commands as part of the sudo group. But I kept getting errors like these:

      [sudo] password for ********: Sorry, try again.
      [sudo] password for ********:
      sudo: no password was provided
      sudo: 1 incorrect password attempt

  So instead, I made it so that my login user would not require a password to run the commands that I wanted to run with sudo. I did this by editing the sudoers file:

      $ su -
      $ cp /etc/sudoers /root/sudoers.bak
      $ visudo
      user ALL=(ALL) NOPASSWD:<command-1>,<command-2> # Add the line at the end of file
      $ exit
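
A sudoers rule like the one in the last observation can be syntax-checked before it goes anywhere near the real file. The sketch below writes a candidate rule to a temporary file and, if visudo is available, checks it with visudo -cf. The user name and command path are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical user name and command; adjust both before using anything like this.
DEPLOY_USER="deploy"
ALLOWED_COMMAND="/bin/cp"

rule_file=$(mktemp)
printf '%s ALL=(ALL) NOPASSWD: %s\n' "$DEPLOY_USER" "$ALLOWED_COMMAND" > "$rule_file"
cat "$rule_file"

# visudo -c only checks syntax; -f points it at the candidate file.
if command -v visudo > /dev/null 2>&1; then
    if visudo -cf "$rule_file" > /dev/null 2>&1; then
        check_result="syntax ok"
    else
        check_result="syntax check failed"
    fi
else
    check_result="visudo not available, skipped"
fi
echo "$check_result"
```

Restricting the rule to full command paths keeps the password-less access as narrow as possible.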

ENHANCEMENTS

- Generate the ssh keys for deploying in CI and use them to deploy the blog. That way, it is more secure than the current method. I would have to delete the generated key from the public server to keep the authorized_keys file from getting too big.

- Eliminate calling the Ubuntu servers/Python servers by providing a user-defined image where I would have installed all the software I need (openssh, Pelican, rsync). This would reduce points of failure as well as enable me to split the stages in the pipeline for better readability.

- Upgrade to Woodpecker 1.0.x from 0.15.x
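
The first enhancement could start from something like the sketch below, which generates a throwaway, passphrase-less ed25519 key pair in a temporary directory. The file name and the comment string are hypothetical choices, and how the new public key gets registered on the blog host (and removed again afterwards) is deliberately left open.

```shell
#!/bin/sh
# Generate a throwaway, passphrase-less ed25519 key pair in a temp directory.
# The file name and the "ci-deploy" comment are hypothetical choices.
key_dir=$(mktemp -d)
key_file="$key_dir/ci_deploy_key"

if command -v ssh-keygen > /dev/null 2>&1; then
    # -N "" sets an empty passphrase, -q suppresses the progress output.
    ssh-keygen -q -t ed25519 -N "" -C "ci-deploy" -f "$key_file"
    keygen_status="generated"
    ls "$key_dir"
else
    keygen_status="ssh-keygen not available, skipped"
fi
echo "$keygen_status"
```

At the end of the CI job the directory in key_dir would be removed so that the private key does not outlive the deployment.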